July 17, 2025

July 16, 2025

We just got back from a family vacation exploring the Grand Canyon, Zion, and Bryce Canyon. As usual, I planned to write about our travels, but Vanessa, my wife, beat me to it.

She doesn't have a blog, but something about this trip inspired her to put pen to paper. When she shared her writing with me, I knew right away her words captured our vacation better than anything I could write.

Instead of starting from scratch, I asked if I could share her writing here. She agreed. I made light edits for publication, but the story and voice are hers. The photos and captions, however, are mine.

We just wrapped up our summer vacation with the boys, and this year felt like a real milestone. Axl graduated high school, so we let him have input on our destination. His request? To see some of the U.S. National Parks. His first pick was Yosemite, but traveling to California in July felt like gambling with wildfires. So we adjusted course, still heading west, but this time to the Grand Canyon, Zion and Bryce.

As it turned out, we didn't fully avoid the fire season. When we arrived at the Grand Canyon, we learned that wildfires had already been burning near Bryce for weeks. And by the time we were leaving Bryce, the Grand Canyon itself was under evacuation orders in certain areas due to its own active fires. We slipped through a safe window without disruption.

We kicked things off with a couple of nights in Las Vegas. The boys had never been, and it felt like a rite of passage. But after two days of blinking lights, slot machines, and entertainment, we were ready for something quieter. The highlight was seeing O by Cirque du Soleil at the Bellagio. The production had us wondering how many crew members it takes to make synchronized underwater acrobatics look effortless.

When the Hoover Dam was built, they used an enormous amount of concrete. If they had poured it all at once, it would have taken over a century to cool and harden. Instead, they poured it in blocks and used cooling pipes to manage the heat. Even today, the concrete is still hardening through a process called hydration, so the dam keeps getting stronger over time.

On the Fourth of July, we picked up our rental car and headed to the Hoover Dam for a guided tour. We learned it wasn't originally built to generate electricity, but rather to prevent downstream flooding from the Colorado River. Built in the 1930s, it's still doing its job. And fun fact: the concrete is still curing after nearly a century. It takes about 100 years to fully cure.

While we were at the Hoover Dam, we got the news that Axl was admitted to study civil engineering. A proud moment in a special place centered on engineering and ambition.

From there, we drove to the South Rim of the Grand Canyon and checked into El Tovar. When we say the hotel sits on the rim, we mean right on the rim. Built in 1905, it has hosted an eclectic list of notable guests, including multiple U.S. presidents, Albert Einstein, Liz Taylor, and Paul McCartney. Standing on the edge overlooking the canyon, we couldn't help but imagine them taking in the same view, the same golden light, the same vast silence. That sense of shared wonder, stretched across generations, made the moment special. No fireworks in the desert this Independence Day, but the sunset over the canyon was its own kind of magic.

The next morning, we hiked the Bright Angel Trail to the 3-mile resthouse. Rangers, staff, and even Google warned us to start early. But with teenage boys and jet lag, our definition of "early" meant hitting the trail by 8:30 am. By 10 am, a ranger reminded us that hiking after that hour is not advised. We pressed on carefully, staying hydrated and dunking our hats and shirts at every water source. Going down was warm. Coming up? Brutal. But we made it, sweaty and proud. Our reward: showers, naps, and well-earned ice cream.

Next up: Zion. We stopped at Horseshoe Bend on the way, a worthy detour with dramatic views of the Colorado River. By the time we entered Zion National Park, we were in total disbelief. The landscape was so perfectly sculpted it didn't look real. Towering red cliffs, hanging gardens, and narrow slot canyons surrounded us. I told Dries, "It's like we're driving through Disneyland", and I meant that in the best way.

We visited Horseshoe Bend, a dramatic curve in the Colorado River near the Grand Canyon. A quiet reminder of what time and a patient river can carve out together.

After a long drive, we jumped in the shared pool at our rental house and met other first-time visitors who were equally blown away. That night, we celebrated our six-year wedding anniversary with tacos and cocktails at a cantina inside a converted gas station. Nothing fancy, but a good memory.

One thing that stood out in Zion was the deer. They roamed freely through the neighborhoods and seemed unbothered by our presence. Every evening, a small group would quietly wander through our yard, grazing on grass and garden beds like they owned the place.

The next morning, we hiked The Narrows, wading through the Virgin River in full gear. Our guide shared stories and trail history, and most importantly, brought a charcuterie board. We hike for snacks, after all. Learning how indigenous communities thrived in these canyons for thousands of years gave us a deeper connection to the land, especially for me, as someone with Native heritage.

We hiked 7.5 miles through the Narrows in Zion National Park. Most of the hike is actually in the river itself, with towering canyon walls rising all around you. One of my favorite hikes ever.

Taking a moment to look up and take it all in.

Wading forward together through the Narrows in Zion.

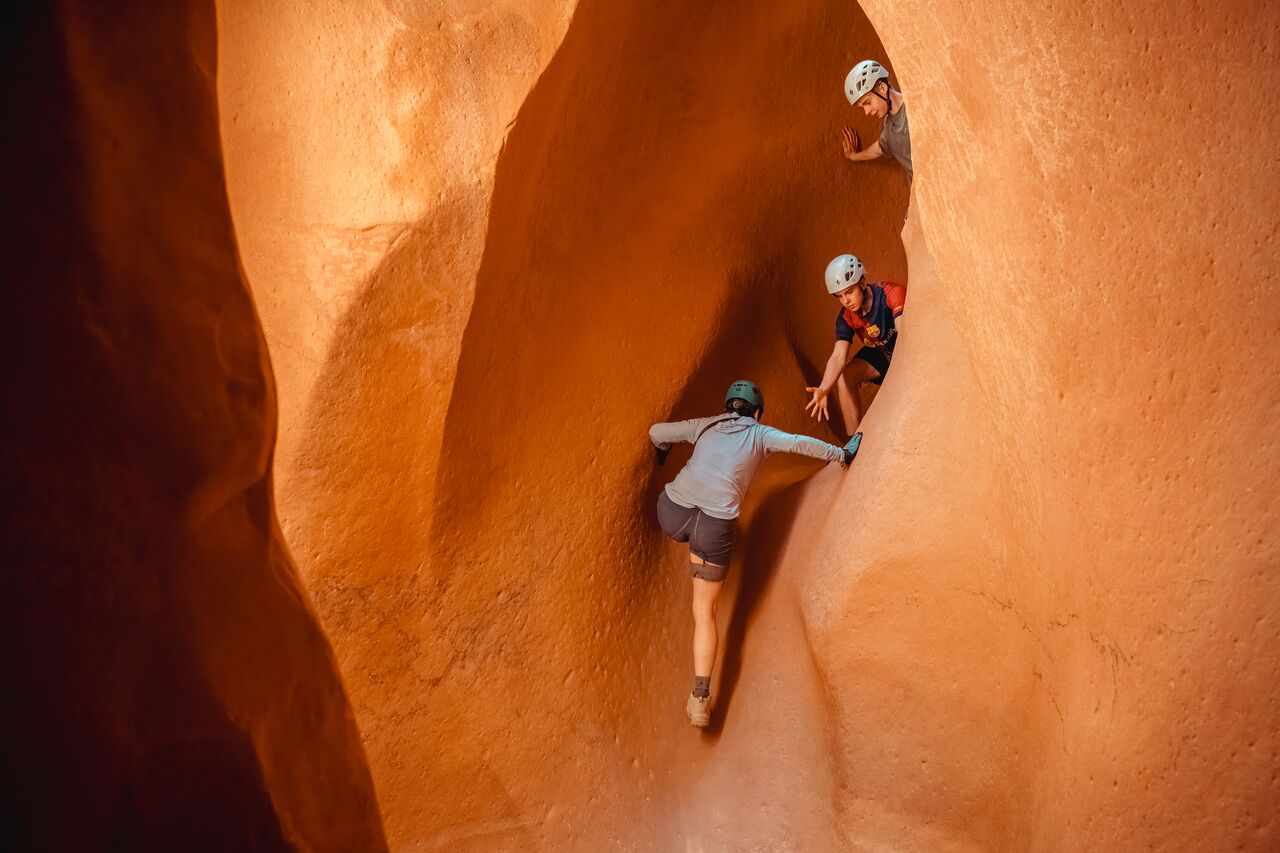

The following day was for rappelling, scrambling, and hiking. The boys were hyped; memories of rappelling in Spain had them convinced there would be waterfalls. Spoiler: there weren't. It hadn't rained in Zion for months. But dry riverbeds didn't dull the excitement. We even found shell fossils embedded in the sandstone, proof the area was once underwater.

Time has a way of flipping the roles.

Getting ready to rappel in Zion, and enjoying every moment of it.

Making our way through the narrow slots in Zion.

From Zion, we headed to Bryce Canyon. The forecast promised cooler temperatures, and we couldn't wait. We stayed at Under Canvas, a glamping site set in open-range cattle territory. Canvas tents with beds and private bathrooms, but no electricity or Wi-Fi. Cue the family debate: "Is this camping or hoteling?" Dries, Axl and Stan voted for "hoteling". I stood alone on "team camping". (Spoiler: it is camping when there are no outlets.) Without our usual creature comforts, we slowed down. We read. We played board games. We played cornhole. We watched sunsets and made s'mores.

Glamping in the high desert outside Bryce, Utah. Even in summer, the high elevation brings cool evenings, and the fire felt perfect after a day on the trail.

The next day, we hiked the Fairyland Loop, eight miles along the rim with panoramic views into Bryce's otherworldly amphitheater of hoodoos. The towering spires and sculpted rock formations gave the park an almost storybook quality, as if the landscape had been carved by imagination rather than erosion. Though the temperature was cooler, the sun still packed a punch, so we were glad to finish before the midday heat. At night, the temperature dropped quickly once the sun went down. We woke up to 45°F (about 7°C) mornings, layering with whatever warm clothes we had packed, which, given we planned for desert heat, wasn't much.

One of our most memorable mornings came with a 4:30 am wake-up call to watch the sunrise at Sunrise Point. We had done something similar with the boys at Acadia in 2018. It's a tough sell at that hour, but always worth it. As the sun broke over the canyon, the hoodoos lit up in shades of orange and gold unlike anything we'd seen the day before. Afterward, we hiked Navajo Loop and Queen's Garden and were ready for a big breakfast at the lodge.

Up before sunrise to watch the hoodoos glow at Bryce Canyon in Utah. Cold and early, but unforgettable.

Vanessa making her way down through the hoodoos on the Navajo Loop in Bryce Canyon.

Later that day, we visited Mossy Cave Trail. We followed the stream, poked around the waterfall, and hunted for fossils. Axl and I were on a mission, cracking open sandstone rocks in hopes of finding hidden treasures. Mostly, we just made a mess (of ourselves). I did stumble upon a tiny sliver of geode ... nature's way of rewarding persistence, I suppose.

Before heading to Salt Lake City for laundry (yes, that's a thing after hiking in the desert for a week), we squeezed in one more thrill: whitewater rafting on the Sevier River. Our guide, Ryan, was part comedian, part chaos agent. His goal was to get the boys drenched! The Class II and III rapids were mellow but still a blast, especially since the river was higher than expected for July. We all stayed in the raft, mostly wet, mostly laughing.

Incredibly, throughout the trip, none of us got sunburned, despite hiking in triple-digit heat, rappelling down canyon walls, and rafting under a cloudless sky. We each drank about 4 to 6 liters of water a day, and no one passed out, so we're calling it a win.

On our final evening, during dinner, I pulled out a vacation questionnaire I had created without telling anyone. Since the boys aren't always quick to share what they loved, I figured it was a better way to ask everyone to rate their experience. What did they love? What would they skip next time? What do they want more of, less of, or never again? It was a simple way to capture the moment, create conversation, reflect on what stood out, and maybe even help shape the next trip. Turns out, teens do have opinions, especially when sunrises and physical exertion are involved.

This trip was special. When I was a kid, I thought hiking and parks were boring. Now, it's what Dries and I seek out. We felt grateful we could hike, rappel, raft, and laugh alongside the boys. We created memories we hope they'll carry with them, long after this summer fades. We're proud of the young men they're becoming, and we can't wait for the next chapter in our family adventures.

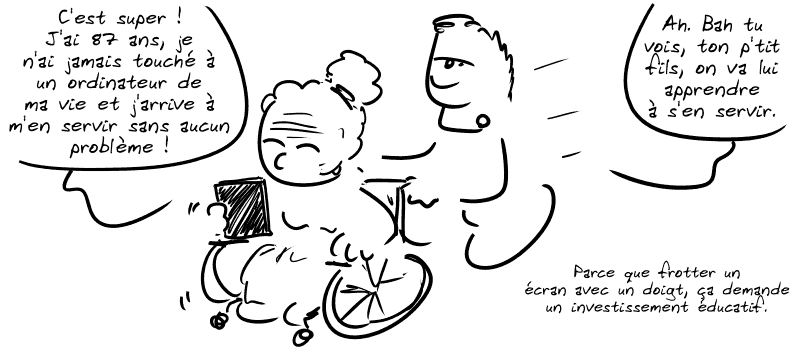

File deduplication isn’t just for massive storage arrays or backup systems—it can be a practical tool for personal or server setups too. In this post, I’ll explain how I use hardlinking to reduce disk usage on my Linux system, which directories are safe (and unsafe) to link, why I’m OK with the trade-offs, and how I automated it with a simple monthly cron job using a neat tool called hadori.

What Is Hardlinking?

In a traditional filesystem, every file has an inode, which is essentially its real identity: the record that holds the file’s metadata and points to its data on disk. A hard link is a different filename that points to the same inode. That means:

- The file appears to exist in multiple places.

- But there’s only one actual copy of the data.

- Deleting one link doesn’t delete the content, unless it’s the last one.

Compare this to a symlink, which is just a pointer to a path. A hardlink is a pointer to the data.

So if you have 10 identical files scattered across the system, you can replace them with hardlinks, and boom—nine of them stop taking up extra space.
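To make the inode idea concrete, here is a minimal Python sketch (not part of my actual setup) that creates a file and a hard link in a throwaway temporary directory, then checks what the filesystem reports. The file names are made up for the demo, and it assumes the temporary directory lives on a filesystem that supports hard links.

```python
import os
import tempfile


def demo_hardlink() -> dict:
    """Create a file plus a hard link to it and report what the filesystem sees."""
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "original.txt")  # hypothetical names
        alias = os.path.join(tmp, "alias.txt")

        with open(original, "w") as fh:
            fh.write("same bytes, two names\n")

        os.link(original, alias)  # add a second name for the same inode

        stat_original = os.stat(original)
        stat_alias = os.stat(alias)

        # Remove one name; the data stays reachable through the other.
        os.remove(original)
        with open(alias) as fh:
            survivor = fh.read()

        return {
            "same_inode": stat_original.st_ino == stat_alias.st_ino,
            "link_count": stat_alias.st_nlink,  # measured before the removal
            "content_after_delete": survivor,
        }


result = demo_hardlink()
print(result)
```

Both names report the same inode number and a link count of 2, and removing one name leaves the content intact.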

Why Use Hardlinking?

My servers run a fairly standard Ubuntu install, and like most Linux machines, the root filesystem accumulates a lot of identical binaries and libraries—especially across /bin, /lib, /usr, and /opt.

That’s not a problem… until you’re tight on disk space, or you’re just a curious nerd who enjoys squeezing every last byte.

In my case, I wanted to reduce disk usage safely, without weird side effects.

Hardlinking is a one-time cost with ongoing benefits. It’s not compression. It’s not archival. But it’s efficient and non-invasive.

Which Directories Are Safe to Hardlink?

Hardlinking only works within the same filesystem, and not all directories are good candidates.
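A quick way to check the same-filesystem requirement is to compare device IDs. The helper below is an illustrative sketch; `same_filesystem` is a name I made up, and the demo uses a temporary directory so it runs anywhere.

```python
import os
import tempfile


def same_filesystem(path_a: str, path_b: str) -> bool:
    """Return True if both paths live on the same device and could share hard links."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


# Demo: a directory and a file inside it are on the same filesystem.
with tempfile.TemporaryDirectory() as tmp:
    sample = os.path.join(tmp, "sample.bin")
    with open(sample, "wb") as fh:
        fh.write(b"x")
    inside_same_fs = same_filesystem(tmp, sample)

print(inside_same_fs)
```

On a real system you could call it as `same_filesystem("/usr", "/opt")`. When two paths are on different devices, `os.link` fails with an `OSError` (errno `EXDEV`).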

Safe directories:

- /bin, /sbin – system binaries

- /lib, /lib64 – shared libraries

- /usr, /usr/bin, /usr/lib, /usr/share, /usr/local – user-space binaries, docs, etc.

- /opt – optional manually installed software

These contain mostly static files: compiled binaries, libraries, man pages… not something that changes often.

Unsafe or risky directories:

- /etc – configuration files, might change frequently

- /var, /tmp – logs, spools, caches, session data

- /home – user files, temporary edits, live data

- /dev, /proc, /sys – virtual filesystems, do not touch

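Hardlinks have no copy-on-write protection. If a hardlinked file is modified in place, every linked name sees the change, so you end up sharing data you didn’t mean to. If a tool instead replaces the file by writing a new copy and renaming it over the old name, that name gets a fresh inode, the deduplication is broken, and you’re back where you started.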

That’s why I avoid any folders with volatile, user-specific, or auto-generated files.
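The two ways a linked file can be "modified" behave very differently, and a small experiment shows it. This is a sketch in a temporary directory with made-up file names; it compares an in-place write with a write-then-rename replacement.

```python
import os
import tempfile


def edit_behaviour() -> dict:
    """Compare an in-place edit with a replace-by-rename on a hardlinked pair."""
    with tempfile.TemporaryDirectory() as tmp:
        first = os.path.join(tmp, "first.conf")    # hypothetical names
        second = os.path.join(tmp, "second.conf")
        with open(first, "w") as fh:
            fh.write("v1\n")
        os.link(first, second)

        # In-place edit: reopening the existing name truncates and rewrites
        # the shared inode, so the other name sees the new content too.
        with open(first, "w") as fh:
            fh.write("v2\n")
        with open(second) as fh:
            second_after_inplace = fh.read()

        # Replace-by-rename: write a new file, then rename it over one name.
        # That name now points at a new inode; the link is broken.
        staged = os.path.join(tmp, "first.conf.new")
        with open(staged, "w") as fh:
            fh.write("v3\n")
        os.replace(staged, first)

        with open(second) as fh:
            second_after_replace = fh.read()
        still_linked = os.stat(first).st_ino == os.stat(second).st_ino

        return {
            "second_after_inplace": second_after_inplace,
            "second_after_replace": second_after_replace,
            "still_linked": still_linked,
        }


outcome = edit_behaviour()
print(outcome)
```

The in-place write shows up under both names, while the rename leaves the second name holding the old content on the old inode.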

Risks and Limitations

Hardlinking is not magic. It comes with sharp edges:

- One inode, multiple names: All links are equal. Editing one changes the data for all.

- Backups: Some backup tools don’t preserve hardlinks or treat them inefficiently.

  ➤ Duplicity, which I use, does not preserve hardlinks. It backs up each linked file as a full copy, so hardlinking won’t reduce backup size.

- Security: Linking files with different permissions or owners can have unexpected results.

- Limited scope: Only works within the same filesystem (e.g., can’t link / and /mnt if they’re on separate partitions).

In my setup, I accept those risks because:

- I’m only linking read-only system files.

- I never link config or user data.

- I don’t rely on hardlink preservation in backups.

- I test changes before deploying.

In short: I know what I’m linking, and why.

What the Critics Say About Hardlinking

Not everyone loves hardlinks—and for good reasons. Two thoughtful critiques are:

The core arguments:

- Hardlinks violate expectations about file ownership and identity.

- They can break assumptions in software that tracks files by name or path.

- They complicate file deletion logic—deleting one name doesn’t delete the content.

- They confuse file monitoring and logging tools, since it’s hard to tell if a file is “new” or just another name.

- They increase the risk of data corruption if accidentally modified in-place by a script that assumes it owns the file.

Why I’m still OK with it:

These concerns are valid—but mostly apply to:

- Mutable files (e.g., logs, configs, user data)

- Systems with untrusted users or dynamic scripts

- Software that relies on inode isolation or path integrity

In contrast, my approach is intentionally narrow and safe:

- I only deduplicate read-only system files in /bin, /sbin, /lib, /lib64, /usr, and /opt.

- These are owned by root, and only changed during package updates.

- I don’t hardlink anything under /home, /etc, /var, or /tmp.

- I know exactly when the cron job runs and what it targets.

So yes, hardlinks can be dangerous—but only if you use them in the wrong places. In this case, I believe I’m using them correctly and conservatively.

Does Hardlinking Impact System Performance?

Good news: hardlinks have virtually no impact on system performance in everyday use.

Hardlinks are a native feature of Linux filesystems like ext4 or xfs. The OS treats a hardlinked file just like a normal file:

- Reading and writing hardlinked files is just as fast as normal files.

- Permissions, ownership, and access behave identically.

- Common tools (ls, cat, cp) don’t care whether a file is hardlinked or not.

- Filesystem caches and memory management work exactly the same.

The only difference is that multiple filenames point to the exact same data.

Things to keep in mind:

- If you edit a hardlinked file, all links see that change because there’s really just one file.

- Some tools (backup, disk usage) might treat hardlinked files differently.

- Debugging or auditing files can be slightly trickier since multiple paths share one inode.

But from a performance standpoint? Your system won’t even notice the difference.

Tools for Hardlinking

There are a few tools out there:

- fdupes – finds duplicates and optionally replaces with hardlinks

- rdfind – more sophisticated detection

- hardlink – simple but limited

- jdupes – high-performance fork of fdupes

About Hadori

From the Debian package description:

This might look like yet another hardlinking tool, but it is the only one which only memorizes one filename per inode. That results in less memory consumption and faster execution compared to its alternatives. Therefore (and because all the other names are already taken) it’s called “Hardlinking DOne RIght”.

Advantages over other tools:

- Predictability: arguments are scanned in order, each first version is kept

- Much lower CPU and memory consumption compared to alternatives

This makes hadori especially suited for system-wide deduplication where efficiency and reliability matter.
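To illustrate the general idea of "scan in order, keep the first version", here is a small Python sketch of hardlink deduplication. It is emphatically not hadori's implementation or algorithm: the function names are invented, it hashes every file (which a serious tool would avoid), and it only links files whose device, size, mode, owner, and group all match.

```python
import hashlib
import os
import stat
import tempfile


def file_digest(path: str) -> str:
    """SHA-256 of a file's contents, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def hardlink_duplicates(roots: list[str]) -> int:
    """Replace later duplicates with hard links to the first copy seen.

    Roots are scanned in the order given. Returns the number of files relinked.
    """
    first_seen: dict[tuple, str] = {}
    relinked = 0
    for root in roots:
        for dirpath, dirnames, filenames in os.walk(root):
            dirnames.sort()  # deterministic traversal order
            for name in sorted(filenames):
                path = os.path.join(dirpath, name)
                st = os.lstat(path)
                if not stat.S_ISREG(st.st_mode) or st.st_size == 0:
                    continue  # skip symlinks, devices, empty files
                key = (st.st_dev, st.st_size, st.st_mode,
                       st.st_uid, st.st_gid, file_digest(path))
                keeper = first_seen.setdefault(key, path)
                if keeper == path or os.stat(keeper).st_ino == st.st_ino:
                    continue  # first copy, or already linked
                # Link under a temporary name, then rename over the duplicate,
                # so the path never disappears halfway through.
                staged = path + ".relink-tmp"
                os.link(keeper, staged)
                os.replace(staged, path)
                relinked += 1
    return relinked


# Demo on a throwaway directory with two identical files and one different one.
with tempfile.TemporaryDirectory() as tmp:
    for name, body in [("a.txt", "same"), ("b.txt", "same"), ("c.txt", "different")]:
        with open(os.path.join(tmp, name), "w") as fh:
            fh.write(body)
    relinked = hardlink_duplicates([tmp])
    inode = {n: os.stat(os.path.join(tmp, n)).st_ino
             for n in ("a.txt", "b.txt", "c.txt")}

print(relinked, inode["a.txt"] == inode["b.txt"])
```

Including mode and ownership in the comparison key is a deliberately conservative choice: it sidesteps the permission surprises that come from linking files that merely share content.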

How I Use Hadori

I run hadori once per month with a cron job. I used Ansible to set it up, but that’s incidental: this could just as easily be a small script in /etc/cron.monthly.

Here’s the actual command:

/usr/bin/hadori --verbose /bin /sbin /lib /lib64 /usr /opt

This scans those directories, finds duplicate files, and replaces them with hardlinks when safe.

And here’s the crontab entry I installed (via Ansible):

roles:
  - debops.debops.cron

cron__jobs:
  hadori:
    name: Hardlink with hadori
    special_time: monthly
    job: /usr/bin/hadori --verbose /bin /sbin /lib /lib64 /usr /opt

Which then created the file /etc/cron.d/hadori:

#Ansible: Hardlink with hadori
@monthly root /usr/bin/hadori --verbose /bin /sbin /lib /lib64 /usr /opt

What Are the Results?

After the first run, I saw a noticeable reduction in used disk space, especially in /usr/lib and /usr/share. On my modest VPS, that translated to about 300–500 MB saved—not huge, but non-trivial for a small root partition.
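If you want to put a number on the savings for a given tree, one rough approach is to compare the sum of all file sizes with the sum counted once per inode. The sketch below is an illustration, not how I measured the figure above; `hardlink_savings` is a made-up helper, and it uses logical file sizes rather than allocated disk blocks.

```python
import os
import stat
import tempfile


def hardlink_savings(root: str) -> tuple[int, int]:
    """Return (apparent_bytes, unique_bytes) for regular files under root.

    apparent_bytes counts every name; unique_bytes counts each inode once.
    """
    apparent = 0
    unique = 0
    seen = set()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            st = os.lstat(os.path.join(dirpath, name))
            if not stat.S_ISREG(st.st_mode):
                continue
            apparent += st.st_size
            inode_id = (st.st_dev, st.st_ino)
            if inode_id not in seen:
                seen.add(inode_id)
                unique += st.st_size
    return apparent, unique


# Demo: one 1000-byte file with a second hard-linked name.
with tempfile.TemporaryDirectory() as tmp:
    blob = os.path.join(tmp, "blob.bin")
    with open(blob, "wb") as fh:
        fh.write(b"\0" * 1000)
    os.link(blob, os.path.join(tmp, "blob-link.bin"))
    apparent, unique = hardlink_savings(tmp)

print(apparent, unique, apparent - unique)
```

The difference between the two numbers is the space that the extra names would have used as separate copies.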

While this doesn’t reduce my backup size (Duplicity doesn’t support hardlinks), it still helps with local disk usage and keeps things a little tidier.

And because the job only runs monthly, it’s not intrusive or performance-heavy.

Final Thoughts

Hardlinking isn’t something most people need to think about. And frankly, most people probably shouldn’t use it.

But if you:

- Know what you’re linking

- Limit it to static, read-only system files

- Automate it safely and sparingly

…then it can be a smart little optimization.

With a tool like hadori, it’s safe, fast, and efficient. I’ve read the horror stories—and decided that in my case, they don’t apply.

This post was brought to you by a monthly cron job and the letters i-n-o-d-e.

July 09, 2025

A few weeks ago, I was knee-deep in CSV files. Not the fun kind. These were automatically generated reports from Cisco IronPort, and they weren’t exactly what I’d call analysis-friendly. Think: dozens of columns wide, thousands of rows, with summary data buried in awkward corners.

I was trying to make sense of incoming mail categories—Spam, Clean, Malware—and the numbers that went with them. Naturally, I opened the file in Excel, intending to wrangle the data manually like I usually do. You know: transpose the table, delete some columns, rename a few headers, calculate percentages… the usual grunt work.

But something was different this time. I noticed the “Get & Transform” section in Excel’s Data ribbon. I had clicked it before, but this time I gave it a real shot. I selected “From Text/CSV”, and suddenly I was in a whole new environment: Power Query Editor.

Wait, What Is Power Query?

For those who haven’t met it yet, Power Query is a powerful tool in Excel (and also in Power BI) that lets you import, clean, transform, and reshape data before it even hits your spreadsheet. It uses a language called M, but you don’t really have to write code—although I quickly did, of course, because I can’t help myself.

In the editor, every transformation step is recorded. You can rename columns, remove rows, change data types, calculate new columns—all through a clean interface. And once you’re done, you just load the result into Excel. Even better: you can refresh it with one click when the source file updates.

From Curiosity to Control

Back to my IronPort report. I used Power Query to:

- Transpose the data (turn columns into rows),

- Remove columns I didn’t need,

- Rename columns to something meaningful,

- Convert text values to numbers,

- Calculate the percentage of each message category relative to the total.

All without touching a single cell in Excel manually. What would have taken 15+ minutes and been error-prone became a repeatable, refreshable process. I even added a “Percent” column that showed something like 53.4%—formatted just the way I wanted.
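For readers who think in code rather than ribbons, the same sequence of steps can be sketched in a few lines of Python. This is not the M code from my query, and the sample data is invented: a tiny stand-in for a wide report with one column per category and counts stored as text.

```python
import csv
import io

# Hypothetical stand-in for the wide report; real IronPort exports look different.
RAW = "Report,Spam,Clean,Malware\nMessages,534,450,16\n"


def category_percentages(raw_csv: str) -> list[dict]:
    """Transpose a one-row wide report and add a Percent column."""
    rows = list(csv.reader(io.StringIO(raw_csv)))
    header, values = rows[0], rows[1]

    # Transpose and drop the label column: one (category, text) pair per row.
    pairs = list(zip(header[1:], values[1:]))

    # Convert text values to numbers.
    counts = [(name, int(text)) for name, text in pairs]

    # Percentage of each category relative to the total.
    total = sum(count for _name, count in counts)
    return [
        {"Category": name, "Messages": count,
         "Percent": round(100 * count / total, 1)}
        for name, count in counts
    ]


table = category_percentages(RAW)
for row in table:
    print(row)
```

The point is the shape of the pipeline: each step takes the previous result and produces a new one, which is exactly how Power Query records its applied steps.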

The Geeky Bit (Optional)

I quickly opened the Advanced Editor to look at the underlying M code. It was readable! With a bit of trial and error, I started customizing my steps, renaming variables for clarity, and turning a throwaway transformation into a well-documented process.

This was the moment it clicked: Power Query is not just a tool; it’s a pipeline.

Lessons Learned

- Sometimes it pays to explore what’s already in the software you use every day.

- Excel is much more powerful than most people realize.

- Power Query turns tedious cleanup work into something maintainable and even elegant.

- If you do something in Excel more than once, Power Query is probably the better way.

What’s Next?

I’m already thinking about integrating this into more of my work. Whether it’s cleaning exported logs, combining reports, or prepping data for dashboards, Power Query is now part of my toolkit.

If you’ve never used it, give it a try. You might accidentally discover your next favorite tool—just like I did.

Have you used Power Query before? Let me know your tips or war stories in the comments!

July 02, 2025

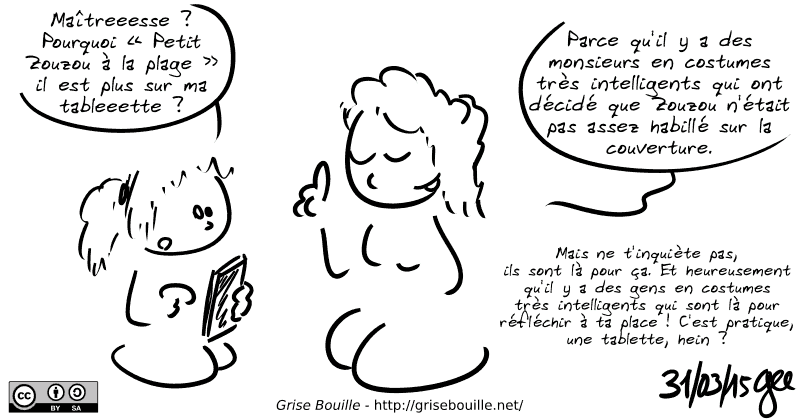

Lately, I’ve noticed something strange happening in online discussions: the humble em dash (—) is getting side-eyed as a telltale sign that a text was written with a so-called “AI.” I prefer the more accurate term: LLM (Large Language Model), because “artificial intelligence” is a bit of a stretch — we’re really just dealing with very complicated statistics.

Now, I get it — people are on high alert, trying to spot generated content. But I’d like to take a moment to defend this elegant punctuation mark, because I use it often — and deliberately. Not because a machine told me to, but because it helps me think.

A Typographic Tool, Not a Trend

The em dash has been around for a long time — longer than most people realize. The oldest printed examples I’ve found are in early 17th-century editions of Shakespeare’s plays, published by the printer Okes in the 1620s. That’s not just a random dash on a page — that’s four hundred years of literary service. If Shakespeare’s typesetters were using em dashes before indoor plumbing was common, I think it’s safe to say they’re not a 21st-century LLM quirk.

The Tragedy of Othello, the Moor of Venice, with long dashes (typeset here with 3 dashes)

A Dash for Thoughts

In Dutch, the em dash is called a gedachtestreepje — literally, a thought dash. And honestly? I think that’s beautiful. It captures exactly what the em dash does: it opens a little mental window in your sentence. It lets you slip in a side note, a clarification, an emotion, or even a complete detour — just like a sudden thought that needs to be spoken before it disappears. For someone like me, who often thinks in tangents, it’s the perfect punctuation.

Why I Use the Em Dash (And Other Punctuation Marks)

I’m autistic, and that means a few things for how I write. I tend to overshare and infodump — not to dominate the conversation, but to make sure everything is clear. I don’t like ambiguity. I don’t want anyone to walk away confused. So I reach for whatever punctuation tools help me shape my thoughts as precisely as possible:

- Colons help me present information in a tidy list — like this one.

- Brackets let me add little clarifications (without disrupting the main sentence).

- And em dashes — ah, the em dash — they let me open a window mid-sentence to give you extra context, a bit of tone, or a change in pace.

They’re not random. They’re intentional. They reflect how my brain works — and how I try to bridge the gap between thoughts and words.

It’s Not Just a Line — It’s a Rhythm

There’s also something typographically beautiful about the em dash. It’s not a hyphen (-), and it’s not a middling en dash (–). It’s long and confident. It creates space for your eyes and your thoughts. Used well, it gives writing a rhythm that mimics natural speech, especially the kind of speech where someone is passionate about a topic and wants to take you on a detour — just for a moment — before coming back to the main road.

I’m that someone.

Don’t Let the Bots Scare You

Yes, LLMs tend to use em dashes. So do thoughtful human beings. Let’s not throw centuries of stylistic nuance out the window because a few bots learned how to mimic good writing. Instead of scanning for suspicious punctuation, maybe we should pay more attention to what’s being said — and how intentionally.

So if you see an em dash in my writing, don’t assume it came from a machine. It came from me — my mind, my style, my history with language. And I’m not going to stop using it just because an algorithm picked up the habit.

July 01, 2025

AI is rewriting the rules of how we work and create. Expert developers can now build faster, non-developers can build software, research is accelerating, and human communication is improving. In the next 10 years, we'll probably see a 1,000x increase in AI demand. That is why Drupal is investing heavily in AI.

But at the same time, AI companies are breaking the web's fundamental economic model. This problem demands our attention.

The AI extraction problem

For 25 years, we built the Open Web on an implicit agreement: search engines could index our content because they sent users back to our websites. That model helped sustain blogs, news sites, and even open source projects.

AI companies broke that model. They train on our work and answer questions directly in their own interfaces, cutting creators out entirely. Anthropic's crawler reportedly makes 70,000 website requests for every single visitor it sends back. That is extraction, not exchange.

This is the Makers and Takers problem all over again.

The damage is real:

- Chegg, an online learning platform, filed an antitrust lawsuit against Google, claiming that AI-powered search answers have crushed their website traffic and revenue.

- Stack Overflow has seen a significant drop in daily active users and new questions (about 25-50%), as more developers turn to ChatGPT for faster answers.

- I recently spoke with a recipe blogger who is a solo entrepreneur. With fewer visitors, they're earning less from advertising. They poured their heart, craft, and sweat into creating a high-quality recipe website, but now they believe their small business won't survive.

None of this should surprise us. According to Similarweb, since Google launched "AI Overviews", the number of searches that result in no click-throughs has increased from 56% in May 2024 to 69% in May 2025, meaning users get their answers directly on the results page.

This "zero-click" phenomenon reinforces the shift I described in my 2015 post, "The Big Reverse of the Web". Ten years ago, I argued that the web was moving away from sending visitors out to independent sites and instead keeping them on centralized platforms, all in the name of providing a faster and more seamless user experience.

However, the picture isn't entirely negative. Some companies find that visitors from AI tools, while small in volume, convert at much higher rates. At Acquia, the company I co-founded, traffic from AI chatbots makes up less than 1 percent of total visitors but converts at over 6 percent, compared to typical rates of 2 to 3 percent. We are still relatively early in the AI adoption cycle, so time will tell how this trend evolves, how marketers adapt, and what new opportunities it might create.

Finding a new equilibrium

There is a reason this trend has taken hold: users love it. AI-generated answers provide instant, direct information without extra clicks. It makes traditional search engines look complicated by comparison.

But this improved user experience comes at a long-term cost. When value is extracted without supporting the websites and authors behind it, it threatens the sustainability of the content we all rely on.

I fully support improving the user experience. That should always come first. But it also needs to be balanced with fair support for creators and the Open Web.

We should design systems that share value more fairly among users, AI companies, and creators. We need a new equilibrium that sustains creative work, preserves the Open Web, and still delivers the seamless experiences users expect.

Some might worry it is already too late, since large AI companies have massive scraped datasets and can generate synthetic data to fill gaps. But I'm not so sure. The web will keep evolving for decades, and no model can stay truly relevant without fresh, high-quality content.

From voluntary rules to enforcement

We have robots.txt, a simple text file that tells crawlers which parts of a website they can access. But it's purely voluntary. Creative Commons launched CC Signals last week, allowing content creators to signal how AI can reuse their work. But both robots.txt and CC Signals are "social contracts" that are hard to enforce.
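To show how lightweight (and how voluntary) this mechanism is, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content is a made-up example, and `GPTBot` is used only as an illustrative crawler token. The check is something a well-behaved crawler runs on itself; nothing in it stops a crawler that chooses not to ask.

```python
from urllib.robotparser import RobotFileParser

# Example rules only: block one AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return what a compliant crawler would conclude from the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


ai_crawler_ok = allowed("GPTBot", "https://example.com/blog/post")
search_crawler_ok = allowed("Googlebot", "https://example.com/blog/post")
print(ai_crawler_ok, search_crawler_ok)
```

The file expresses a preference, and the parser only reports it. Enforcement has to happen somewhere else.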

Today, Cloudflare announced they will default to blocking AI crawlers from accessing content. This change lets website owners decide whether to allow access and whether to negotiate compensation. Cloudflare handles 20% of all web traffic. When an AI crawler tries to access a website protected by Cloudflare, it must pass through Cloudflare's servers first. This allows Cloudflare to detect crawlers that ignore robots.txt directives and block them.

This marks a shift from purely voluntary signals to actual technical enforcement. Large sites could already afford their own infrastructure to detect and block crawlers or negotiate licensing deals directly. For example, Reddit signed a $60 million annual deal with Google to license its content for AI training.

However, most content creators, like you and me, can do neither.

Cloudflare's actions establish a crucial principle: AI training data has a price, and creators deserve to share in the value AI generates from their work.

The missing piece: content licensing marketplaces

Accessible enforcement infrastructure is step one, and Cloudflare now provides that. Step two would be a content licensing marketplace that helps broker deals between AI companies and content creators at any scale. This would move us from simply blocking to creating a fair economic exchange.

To the best of my knowledge, such marketplaces do not exist yet, but the building blocks are starting to emerge. Matthew Prince, CEO of Cloudflare, has hinted that Cloudflare may be working on building such a marketplace, and I think it is a great idea.

I don't know what that will look like, but I imagine something like Shutterstock for AI training data, combined with programmatic pricing like Google Ads. On Shutterstock, photographers upload images, set licensing terms, and earn money when companies license their photos. Google Ads automatically prices and places millions of ads without manual negotiations. A future content licensing marketplace could work in a similar way: creators would set licensing terms (like they do on Shutterstock), while automated systems manage pricing and transactions (as Google Ads does).

Today, only large platforms like Reddit can negotiate direct licensing deals with AI companies. A marketplace with programmatic pricing would make licensing accessible to creators of all sizes. Instead of relying on manual negotiations or being scraped for free, creators could opt into fair, programmatic licensing programs.

This would transform the dynamic from adversarial blocking to collaborative value creation. Creators get compensated. AI companies get legal, high-quality training data. Users benefit from better AI tools built on ethically sourced content.

Making the Open Web sustainable

We built the Open Web to democratize access to knowledge and online publishing. AI advances this mission of democratizing knowledge. But we also need to ensure the people who write, record, code, and share that knowledge aren't left behind.

The issue is not that AI exists. The problem is that we have not built economic systems to support the people and organizations that AI relies on. This affects independent bloggers, large media companies, and open source maintainers whose code and documentation train coding assistants.

Call me naive, but I believe AI companies want to work with content creators to solve this. Their challenge is that no scalable system exists to identify, contact, and pay millions of content creators.

Content creators lack tools to manage and monetize their rights. AI companies lack systems to discover and license content at scale. Cloudflare's move is a first step. The next step is building content licensing marketplaces that connect creators directly with AI companies.

The Open Web needs economic systems that sustain the people who create its content. There is a unique opportunity here: if content creators and AI companies build these systems together, we could create a stronger, fairer, and more resilient Web than we have had in 25 years. The jury is out on that, but one can dream.

Disclaimer: Acquia, my company, has a commercial relationship with Cloudflare, but this perspective reflects my long-standing views on sustainable web economics, not any recent briefings or partnerships.

June 25, 2025

Sometimes things go your way, sometimes they just don't. The townhouse we had completely fallen in love with has unfortunately been rented to someone else. A pity, but we are not giving up. We are continuing our search, and hopefully you can help us!

We are three people who want to share a house in Ghent. We form a warm, conscious, and respectful housing group, and we dream of a place where we can combine calm, connection, and creativity.

Who are we?

- Amedee (48): IT professional, balfolk dancer, amateur musician, enjoys board games and hiking, autistic and socially engaged

- Chloë (almost 52): artist, former Waldorf teacher and permaculture designer, loves creativity, cooking, and nature

- Kathleen (54): doodle artist with a socio-cultural background, loves coziness and being outdoors, and enjoys writing

We want to create a home together where communication, care, and freedom are central. A place where you feel at home, with room for small activities such as a game night, a workshop, a creative session, or simply being quietly together.

What are we looking for?

- A house (not an apartment) in Ghent, at most 15 minutes by bike from Gent-Sint-Pieters station

- Energy efficient: EPC B or better

- At least 3 spacious bedrooms of about 20 m² each

- Rent:

  - up to €1650/month for 3 bedrooms

  - up to €2200/month for 4 bedrooms

Extra spaces such as an attic, guest room, studio, office, or hobby room are very welcome. We like airy, multifunctional places that can grow along with our needs.

Available: from now, October at the latest

Does the house have 4 bedrooms? Then we would gladly welcome a fourth housemate who shares our values. But we deliberately want to avoid more than 4 residents, because small-scale living works best for us.

Know of something? Let us know!

Do you know a house that fits this picture?

We are open to tips via real estate agencies, friends, neighbors, colleagues, or other networks. Everything helps!

Contact: amedee@vangasse.eu

Thank you for keeping an eye out, and feel free to share.

June 24, 2025

In my post about digital gardening and public notes, I shared a principle I follow: "If a note can be public, it should be". I also mentioned using Obsidian for note-taking. Since then, various people have asked about my Obsidian setup.

I use Obsidian to collect ideas over time rather than to manage daily tasks or journal. My setup works like a Commonplace book, where you save quotes, thoughts, and notes to return to later. It is also similar to a Zettelkasten, where small, linked notes build deeper understanding.

What makes such note-taking systems valuable is how they help ideas grow and connect. When notes accumulate over time, connections start to emerge. Ideas compound slowly. What starts as scattered thoughts or quotes becomes the foundation for blog posts or projects.

Why plain text matters

One of the things I appreciate most about Obsidian is that it stores notes as plain text Markdown files on my local filesystem.

Plain text files give you full control. I sync them with iCloud, back them up myself, and track changes using Git. You can search them with command-line tools, write scripts to process them outside of Obsidian, or edit them in other applications. Your notes stay portable and usable any way you want.
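As a minimal sketch of the "write scripts to process them outside of Obsidian" idea, the Python below walks a folder of Markdown notes and lists the ones that mention every tag in a given set. The tag regex is a rough heuristic rather than Obsidian's own tag grammar, and the function names and example tags are assumptions for illustration.

```python
import re
from pathlib import Path

# Heuristic, not Obsidian's exact rules: "#" followed by a letter, then
# letters, digits, "_", "-" or "/". A "#" glued to a preceding word
# character (as in "C#") or to another "#" is ignored.
TAG_PATTERN = re.compile(r"(?<![\w#])#([A-Za-z][\w/-]*)")


def extract_tags(text: str) -> set[str]:
    """Return the lowercase tags found in a note's text."""
    return {tag.lower() for tag in TAG_PATTERN.findall(text)}


def notes_with_tags(vault: Path, wanted: set[str]) -> list[Path]:
    """Return every .md file under vault that carries all wanted tags."""
    wanted = {tag.lower() for tag in wanted}
    matches = []
    for note in sorted(vault.rglob("*.md")):
        if wanted <= extract_tags(note.read_text(encoding="utf-8")):
            matches.append(note)
    return matches
```

Calling `notes_with_tags(Path("/path/to/vault"), {"chess", "opening"})` would return the matching files. Because the notes are plain files, none of this depends on Obsidian being installed.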

Plus, plain text files have long-term benefits. Note-taking apps come and go, companies fold, subscription models shift. But plain text files remain accessible. If you want your notes to last for decades, they need to be in a format that stays readable, editable, and portable as technology changes. A Markdown file you write today will open just fine in 2050.

All this follows what Obsidian CEO Steph Ango calls the "files over apps" philosophy: your files should outlast the tools that create them. Don't lock your thinking into formats you might not be able to access later.

My tools

Before I dive into how I use Obsidian, it is worth mentioning that I use different tools for different types of thinking. Some people use Obsidian for everything – task management, journaling, notes – but I prefer to separate those.

For daily task management and meeting notes, I rely on my reMarkable Pro. A study titled The Pen Is Mightier Than the Keyboard by Mueller and Oppenheimer found that students who took handwritten notes retained concepts better than those who typed them. Handwriting meeting notes engages deeper cognitive processing than typing, which can improve understanding and memory.

For daily journaling and event tracking, I use a custom iOS app I built myself. I might share more about that another time.

Obsidian is where I grow long-term ideas. It is for collecting insights, connecting thoughts, and building a knowledge base that compounds over time.

How I capture ideas

In Obsidian, I organize my notes around topic pages. Examples are "Coordination challenges in Open Source", "Solar-powered websites", "Open Source startup lessons", or "How to be a good dad".

I have hundreds of these topic pages. I create a new one whenever an idea feels worth tracking.

Each topic page grows slowly over time. I add short summaries, interesting links, relevant quotes, and my own thoughts whenever something relevant comes up. The idea is to build a thoughtful collection of notes that deepens and matures over time.

Some notes stay short and focused. Others grow rich with quotes, links, and personal reflections. As notes evolve, I sometimes split them into more specific topics or consolidate overlapping ones.

I do not schedule formal reviews. Instead, notes come back to me when I search, clip a new idea, or revisit a related topic. A recent thought often leads me to something I saved months or years ago, and may prompt me to reorganize related notes.

Obsidian's core features help these connections deepen. I use tags, backlinks, and graph view to connect notes and reveal patterns between them.

How I use notes

The biggest challenge with note-taking is not capturing ideas, but actually using them. Most notes get saved and then forgotten.

Some of my blog posts grow directly from these accumulated notes. Makers and Takers, one of my most-read blog posts, pre-dates Obsidian and did not come from this system. But if I write a follow-up, it will. I have a "Makers and Takers" note where relevant quotes and ideas are slowly accumulating.

As my collection of notes grows, certain notes keep bubbling up while others fade into the background. The ones that resurface again and again often signal ideas worth writing about or projects worth pursuing.

What I like about this process is that it turns note-taking into more than just storage. As I've said many times, writing is how I think. Writing pushes me to think, and it is the process I rely on to flesh out ideas. I do not treat my notes as final conclusions, but as ongoing conversations with myself. Sometimes two notes written months apart suddenly connect in a way I had not noticed before.

My plugin setup

Obsidian has a large plugin ecosystem that reminds me of Drupal's. I mostly stick with core plugins, but use the following community ones:

- Dataview – Think of it as SQL queries for your notes. I use it to generate dynamic lists like `TABLE FROM #chess AND #opening AND #black` to see all my notes on chess openings for Black. It turns your notes into a queryable database.

- Kanban – Visual project boards for tracking progress on long-term ideas. I maintain Kanban boards for Acquia, Drupal, improvements to dri.es, and more. Unlike daily task management, these boards capture ideas that evolve over months or years.

- Linter – Automatically formats my notes: standardizes headings, cleans up spacing, and more. It runs on save, keeping my Markdown clean.

- Encrypt – Encrypts specific notes with password protection. Useful for sensitive information that I want in my knowledge base but need to keep secure.

- Pandoc – Exports notes to Word documents, PDFs, HTML, and other formats using Pandoc.

- Copilot – I'm still testing this, but the idea of chatting with your own knowledge base is compelling. You can also ask AI to help organize notes more effectively.

The Obsidian Web Clipper

The tool I'd actually recommend most isn't a traditional Obsidian plugin: it's the official Obsidian Web Clipper browser extension. I have it installed on my desktop and phone.

When I find something interesting online, I highlight it and clip it directly into Obsidian. This removes friction from the process.

I usually save just a quote or a short section of an article, not the whole article. Some days I save several clips. Other days, I save none at all.

Why this works

For me, Obsidian is not just a note-taking tool. It is a thinking environment. It gives me a place to collect ideas, let them mature, and return to them when the time is right. I do not aim for perfect organization. I aim for a system that feels natural and helps me notice connections I would otherwise miss.

June 22, 2025

OpenTofu

Terraform or OpenTofu (the open-source fork supported by the Linux Foundation) is a nice tool to set up infrastructure on different cloud environments. There is also a provider that supports libvirt.

If you want to get started with OpenTofu, there is a free training available from the Linux Foundation:

I also joined the talk about OpenTofu and Infrastructure as Code in general in the Virtualization and Cloud Infrastructure devroom at FOSDEM this year:

I won't explain “declarative” vs “imperative” in this blog post; there are already plenty of blog posts and websites that try to explain this in more detail (the links above are a good start).

The default behaviour of OpenTofu is not to try to update an existing environment. This makes it usable to create disposable environments.

Tails

Tails is a nice GNU/Linux distribution to connect to the Tor network.

Personally, I’m less into the “privacy” aspect of the Tor network (although being aware that you’re tracked and followed is important), probably because I’m lucky to live in the “Free world”.

For people who are less lucky (people who live in a country where freedom of speech isn’t valued), or for journalists, for example, there are good reasons to use the Tor network and hide their internet traffic.

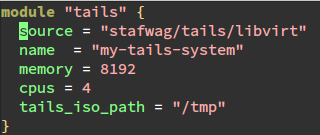

tails/libvirt Terraform/OpenTofu module

To make this easier, I created a Terraform/OpenTofu module that spins up a virtual machine with the latest Tails version on libvirt.

There are security considerations when you run Tails in a virtual machine. See

for more information.

The source code of the module is available at the git repository:

The module is published on the Terraform Registry and the OpenTofu Registry.

Have fun!

June 18, 2025

Have you always wanted to live together with nice people in a warm, open, and respectful atmosphere? Then this might be something for you.

Together with two friends, I am starting a new cohousing project in Ghent. We have our eye on a beautifully renovated townhouse, and we are looking for a fourth person to share the house with.

The house

It is a spacious townhouse full of character, with energy label B+. It has:

- Four full-sized bedrooms of 18 to 20 m² each

- One extra room that we can set up as a guest room, office, or hobby room

- Two bathrooms

- Two kitchens

- An attic with sturdy beams (the creative ideas are already bubbling up!)

The location is excellent: on the Koning Albertlaan, barely 5 minutes by bike from Gent-Sint-Pieters station and 7 minutes from the Korenmarkt. The rent is €2200 in total, which comes to €550 per person with four residents.

The house is available from 1 July 2025.

Who are we looking for?

We are looking for someone who recognizes themselves in a number of shared values and would like to be part of a respectful, open, and conscious living environment. Concretely, for us that means:

- You are open to diversity in all its forms

- You are respectful and communicative, and you take others into account

- You have an affinity with themes such as inclusion, mental health, and living together with attention for one another

- You have a calm character and like to contribute to a safe, harmonious atmosphere in the house

- Age is not decisive, but because we are all 40+ ourselves, we are rather looking for someone who recognizes themselves in that stage of life

Something for you?

Do you feel a click with this story? Or do you have questions and want to get to know us better? Then do not hesitate to get in touch via amedee@vangasse.eu.

Is this not for you, but do you know someone who would fit this picture perfectly? Then please share this call. Thank you!

Together we can turn this house into a warm home.

A few years ago, I quietly adopted a small principle that has changed how I think about publishing on my website. It's a principle I've been practicing for a while now, though I don't think I've ever written about it publicly.

The principle is: If a note can be public, it should be.

It sounds simple, but this idea has quietly shaped how I treat my personal website.

I was inspired by three overlapping ideas: digital gardens, personal memexes, and "Today I Learned" entries.

Writers like Tom Critchlow, Maggie Appleton, and Andy Matuschak maintain what they call digital gardens. They showed me that a personal website does not have to be a collection of polished blog posts. It can be a living space where ideas can grow and evolve. Think of it more as an ever-evolving notebook than a finished publication, constantly edited and updated over time.

I also learned from Simon Willison, who publishes small, focused Today I Learned (TIL) entries. They are quick, practical notes that capture a moment of learning. They don't aim to be comprehensive; they simply aim to be useful.

And then there is Cory Doctorow. In 2021, he explained his writing and publishing workflow, which he describes as a kind of personal memex. A memex is a way to record your knowledge and ideas over time. While his memex is not public, I found his approach inspiring.

I try to take a lot of notes. For the past four years, my tool of choice has been Obsidian. It is where I jot things down, think things through, and keep track of what I am learning.

In Obsidian, I maintain a Zettelkasten system. It is a method for connecting ideas and building a network of linked thoughts. It is not just about storing information but about helping ideas grow over time.

At some point, I realized that many of my notes don't contain anything private. If they're useful to me, there is a good chance they might be useful to someone else too. That is when I adopted the principle: If a note can be public, it should be.

So a few years ago, I began publishing these kinds of notes on my site. You might have seen examples like Principles for life, PHPUnit tests for Drupal, Brewing coffee with a moka pot when camping or Setting up password-free SSH logins.

These pages on my website are not blog posts. They are living notes. I update them as I learn more or come back to the topic. To make that clear, each note begins with a short disclaimer that says what it is. Think of it as a digital notebook entry rather than a polished essay.

Now, I do my best to follow my principle, but I fall short more than I care to admit. I have plenty of notes in Obsidian that could have made it to my website but never did.

Often, it's simply inertia. Moving a note from Obsidian to my Drupal site involves a few steps. While not difficult, these steps consume time I don't always have. I tell myself I'll do it later, and then 'later' often never arrives.

Other times, I hold back because I feel insecure. I am often most excited to write when I am learning something new, but that is also when I know the least. What if I misunderstood something? The voice of doubt can be loud enough to keep a note trapped in Obsidian, never making it to my website.

But I keep pushing myself to share in public. I have been learning in the open and sharing in the open for 25 years, and some of the best things in my life have come from that. So I try to remember: if notes can be public, they should be.

June 11, 2025

I am excited to share some wonderful news—Sibelga and Passwerk have recently published a testimonial about my work, and it has been shared across LinkedIn, Sibelga’s website, and even on YouTube!

- LinkedIn post: Sibelga – Testimonial with Passwerk. A brief but impactful post summarizing the collaboration.

- Article on Sibelga.be (Dutch): Wanneer anders zijn een sterkte wordt ("When being different becomes a strength"). The full article dives deeper into the story and the value of inclusive hiring.

- YouTube video: Passwerk @ Sibelga – Testimonial. A short video in which I talk about my experience working at Sibelga through Passwerk.

What Is This All About?

Passwerk is an organisation that matches talented individuals on the autism spectrum with roles in IT and software testing, creating opportunities based on strengths and precision. I have been working with them as a consultant, currently placed at Sibelga, Brussels’ electricity and gas distribution network operator.

The article and video highlight how being “different” does not have to be a limitation—in fact, it can be a real asset in the right context. It means a lot to me to be seen and appreciated for who I am and the quality of my work.

Why This Matters

For many neurodivergent people, the professional world can be full of challenges that go beyond the work itself. Finding the right environment—one that values accuracy, focus, and dedication—can be transformative.

I am proud to be part of a story that shows what is possible when companies look beyond stereotypes and embrace neurodiversity as a strength.

Thank you to Sibelga, Passwerk, and everyone who contributed to this recognition. It is an honour to be featured, and I hope this story inspires more organisations to open up to diverse talents.

Want to know more? Check out the article or watch the video!

June 09, 2025

Imagine a marketer opening Drupal with a clear goal in mind: launch a campaign for an upcoming event.

They start by uploading a brand kit to Drupal CMS: logos, fonts, and color palette. They define the campaign's audience as mid-sized business owners interested in digital transformation. Then they create a creative guide that outlines the event's goals, key messages, and tone.

With this in place, AI agents within Drupal step in to assist. Drawing from existing content and media, the agents help generate landing pages, each optimized for a specific audience segment. They suggest headlines, refine copy based on the creative guide, create components based on the brand kit, insert a sign-up form, and assemble everything into cohesive, production-ready pages.

Using Drupal's built-in support for the Model Context Protocol (MCP), the AI agents connect to analytics tools and monitor performance. If a page is not converting well, the system makes overnight updates. It might adjust layout, improve clarity, or refine the calls to action.

Every change is tracked. The marketer can review, approve, revert, or adjust anything. They stay in control, even as the system takes on more of the routine work.

Why it matters

AI is changing how websites are built and managed faster than most people expected. The digital experience space is shifting from manual workflows to outcome-driven orchestration. Instead of building everything from scratch, users will set goals, and AI will help deliver results.

This future is not about replacing people. It is about empowering them. It is about freeing up time for creative and strategic work while AI handles the rest. AI will take care of routine tasks, suggest improvements, and respond to real-time feedback. People will remain in control, but supported by powerful new tools that make their work easier and faster.

The path forward won't be perfect. Change is never easy, and there are still many lessons to learn, but standing still isn't an option. If we want AI to head in the right direction, we have to help steer it. We are excited to move fast, but just as committed to doing it thoughtfully and with purpose.

The question is not whether AI will change how we build websites, but how we as a community will shape that change.

A coordinated push forward

Drupal already has a head start in AI. At DrupalCon Barcelona 2024, I showed how Drupal's AI tools help a site creator market wine tours. Since then, we have seen a growing ecosystem of AI modules, active integrations, and a vibrant community pushing boundaries. Today, about 1,000 people are sharing ideas and collaborating in the #ai channel on Drupal Slack.

At DrupalCon Atlanta in March 2025, I shared our latest AI progress. We also brought together key contributors working on AI in Drupal. Our goal was simple: get organized and accelerate progress. After the event, the group committed to align on a shared vision and move forward together.

Since then, this team has been meeting regularly, almost every day. I've been working with the team to help guide the direction. With a lot of hard work behind us, I'm excited to introduce the Drupal AI Initiative.

The Drupal AI Initiative builds on the momentum in our community by bringing structure and shared direction to the work already in progress. By aligning around a common strategy, we can accelerate innovation.

What we're launching today

The Drupal AI Initiative is closely aligned with the broader Drupal CMS strategy, particularly in its focus on making site building both faster and easier. At the same time, this work is not limited to Drupal CMS. It is also intended to benefit people building custom solutions on Drupal Core, as well as those working with alternative distributions of Drupal.

To support this initiative, we are announcing:

- A clear strategy to guide Drupal's AI vision and priorities (PDF mirror).

- A Drupal AI leadership team to drive product direction, fundraising, and collaboration across work tracks.

- A funded delivery team focused on execution, with the equivalent of several full-time roles already committed, including technical leads, UX and project managers, and release coordination.

- Active work tracks covering areas like AI Core, AI Products, AI Marketing, and AI UX.

- USD $100,000 in operational funding, contributed by the initiative's founding companies.

For more details, read the full announcement on the Drupal AI Initiative page on Drupal.org.

Founding members and early support

Some of the founding members of the Drupal AI initiative during our launch call on Google Hangouts.

Over the past few months, we've invested hundreds of hours shaping our AI strategy, defining structure, and taking first steps.

I want to thank the founding members of the Drupal AI Initiative. These individuals and organizations played a key role in getting things off the ground. The list is ordered alphabetically by last name to recognize all contributors equally:

- Jamie Abrahams (FreelyGive) – Innovation and AI architecture

- Baddý Breidert (1xINTERNET) – Governance, funding, and coordination

- Christoph Breidert (1xINTERNET) – Product direction and roadmap

- Dries Buytaert (Acquia / Drupal) – Strategic oversight and direction

- Dominique De Cooman (Dropsolid) – Fundraising and business alignment

- Marcus Johansson (FreelyGive) – Technical leadership

- Paul Johnson (1xINTERNET) – Marketing and outreach

- Kristen Pol (Salsa Digital) – Cross-team alignment and contributor engagement

- Lauri Timmanee (Acquia) – Experience Builder AI integration

- Frederik Wouters (Dropsolid) – Communications and outreach

These individuals, along with the companies supporting them, have already contributed significant time, energy, and funding. I am grateful for their early commitment.

I also want to thank the staff at the Drupal Association and the Drupal CMS leadership team for their support and collaboration.

What comes next

I'm glad the Drupal AI Initiative is now underway. The Drupal AI strategy is published, the structure is in place, and multiple work tracks are open and moving forward. We'll share more details and updates in the coming weeks.

With every large initiative, we are evolving how we organize, align, and collaborate. The Drupal AI Initiative builds on that progress. As part of that, we are also exploring more ways to recognize and reward meaningful contributions.

We are creating ways for more of you to get involved with Drupal AI. Whether you are a developer, designer, strategist, or sponsor, there is a place for you in this work. If you're part of an agency, we encourage you to step forward and become a Maker. The more agencies that contribute, the more momentum we build.

Update: In addition to the initiative's founding members, Amazee.io already stepped forward with another commitment of USD $20,000 and one full-time contributor. Thank you! This brings the total operating budget to USD $120,000. Please consider joining as well.

AI is changing how websites and digital experiences are built. This is our moment to be part of the change and help define what comes next.

Join us in the #ai-initiative channel on Drupal Slack to get started.

June 08, 2025

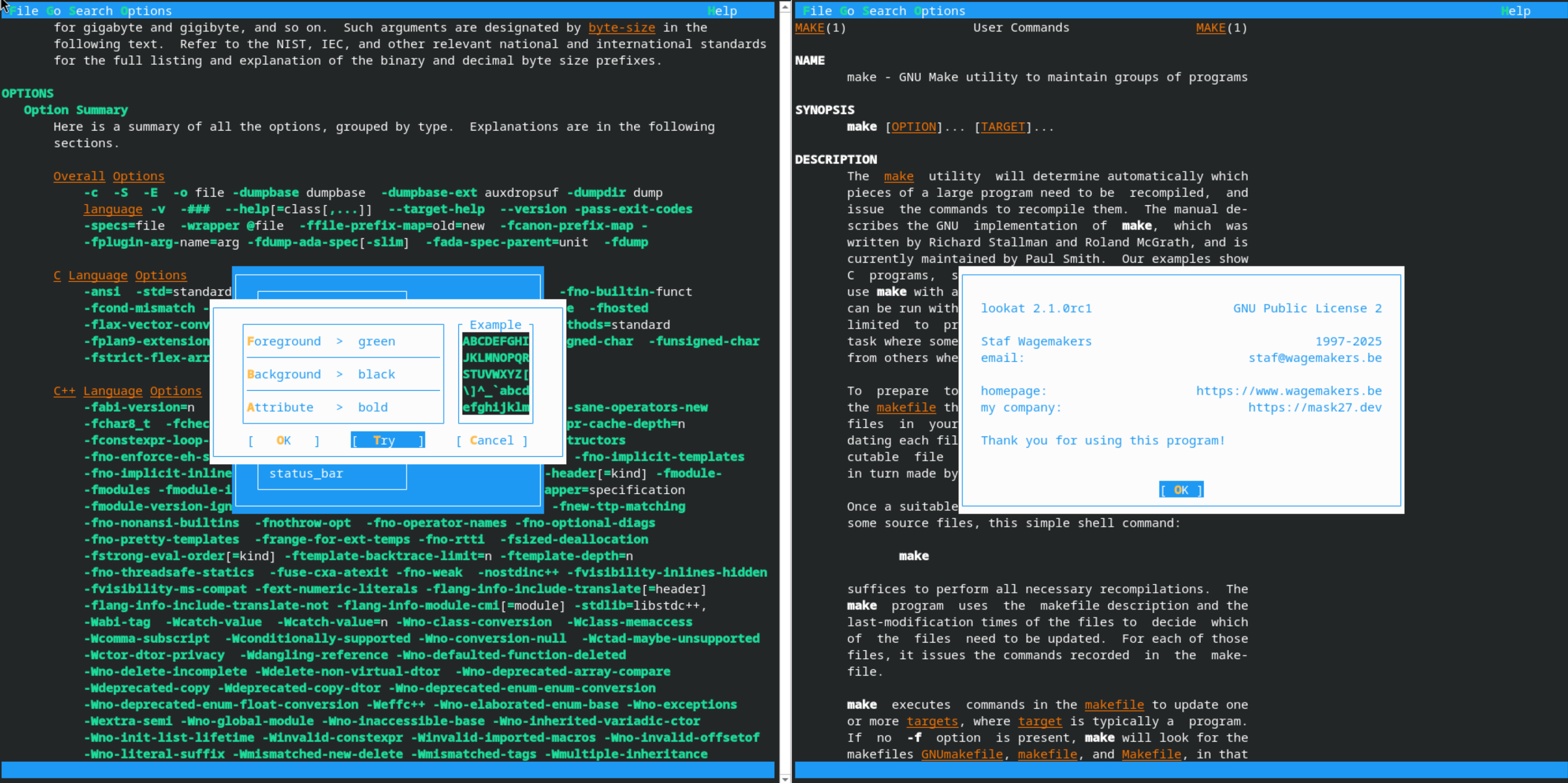

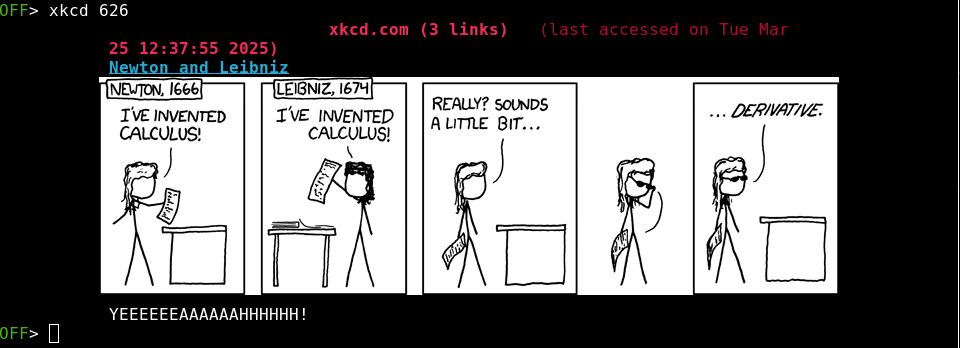

Lookat 2.1.0rc1 is the latest development release of Lookat/Bekijk, a user-friendly Unix file browser/viewer that supports colored man pages.

The focus of the 2.1.0 release is to add ANSI Color support.

News

8 Jun 2025 Lookat 2.1.0rc1 Released

Lookat 2.1.0rc1 is the first release candidate of Lookat 2.1.0.

ChangeLog

Lookat / Bekijk 2.1.0rc1

- ANSI Color support

Lookat 2.1.0rc1 is available at:

- https://www.wagemakers.be/english/programs/lookat/

- Download it directly from https://download-mirror.savannah.gnu.org/releases/lookat/

- Or from the Git repository at GNU Savannah https://git.savannah.gnu.org/cgit/lookat.git/

Have fun!

June 04, 2025

A few weeks ago, I set off for Balilas, a balfolk festival in Janzé (near Rennes), Brittany (France). I had never been before, but as long as you have dance shoes, a tent, and good company, what more do you need?

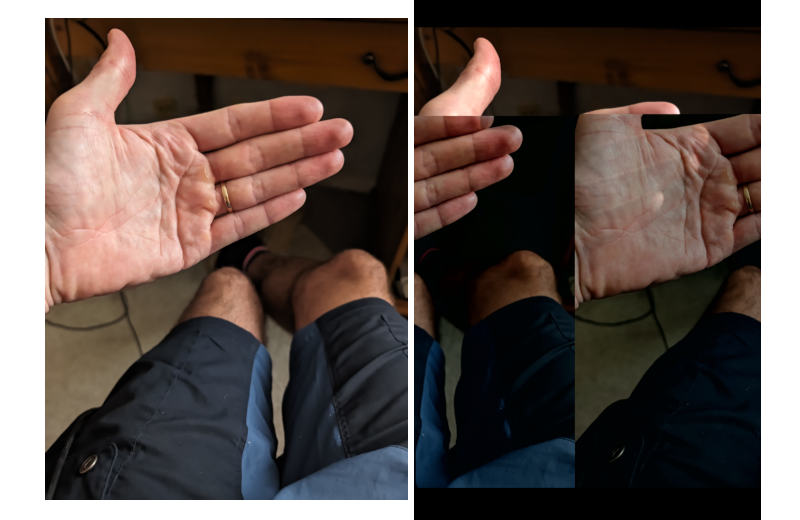

Bananas for scale

From Ghent to Brittany… with Two Dutch Strangers

My journey began in Ghent, where I was picked up by Sterre and Michelle, two dancers from the Netherlands. I did not know them too well beforehand, but in the balfolk world, that is hardly unusual — de balfolkcommunity is één grote familie — one big family.

We took turns driving, chatting, laughing, and singing along. Google Maps logged our total drive time at 7 hours and 39 minutes.

Google knows everything

Péage – one of the many

Along the way, we had the perfect soundtrack: French Road Trip, 7 hours and 49 minutes of French and Francophone tubes.

https://open.spotify.com/playlist/3jRMHCl6qVmVIqXrASAAmZ?si=746a7f78ca30488a

A Tasty Stop in Pré-en-Pail-Saint-Samson

Somewhere around dinner time, we stopped at La Sosta, a cozy Italian restaurant in Pré-en-Pail-Saint-Samson (2300 inhabitants). I had a pizza normande: tomato base, andouille, apple, mozzarella, cream, and parsley. A delicious and unexpected regional twist, definitely worth remembering!

pizza normande

The pizzas were excellent, but also generously sized, too big to finish in one sitting. Fortunately, they offered to pack up the leftovers for us to take away. That was a nice touch, and much appreciated after a long day on the road.

Just too much to eat it all

Arrival Just Before Dark

We arrived at the Balilas festival site five minutes after sunset, with just enough light left to set up our tents before nightfall. Trugarez d’an heol — thank you, sun, for holding out a little longer.

There were two other cars filled with people coming from the Netherlands, but they had booked a B&B. We chose to camp on-site to soak in the full festival atmosphere.

Enjoy the view!

Banana pancakes!

Balilas itself was magical: days and nights filled with live music, joyful dancing, friendly faces, and the kind of warm atmosphere that defines balfolk festivals.

Photo: Poppy Lens

More info and photos: balilas.lesviesdansent.bzh and @balilas.balfolk on Instagram

Balfolk is more than just dancing. It is about trust, openness, and sharing small adventures with people you barely know—who somehow feel like old friends by the end of the journey.

Tot de volgende — à la prochaine — betek ar blez a zeu!

Thank you Maï for proofreading the Breton expressions.

May 28, 2025

In the world of DevOps and continuous integration, automation is essential. One fascinating way to visualize the evolution of a codebase is with Gource, a tool that creates animated tree diagrams of project histories.

Recently, I implemented a GitHub Actions workflow in my ansible-servers repository to automatically generate and deploy Gource visualizations. In this post, I will walk you through how the workflow is set up and what it does.

But first, let us take a quick look back…

Back in 2013: Visualizing Repos with Bash and XVFB

More than a decade ago, I published a blog post about Gource (in Dutch) where I described a manual workflow using Bash scripts. At that time, I ran Gource headlessly using xvfb-run, piped its output through pv, and passed it to ffmpeg to create a video.

It looked something like this:

    #!/bin/bash -ex

    xvfb-run -a -s "-screen 0 1280x720x24" \
      gource \
        --seconds-per-day 1 \
        --auto-skip-seconds 1 \
        --file-idle-time 0 \
        --max-file-lag 1 \
        --key -1280x720 \
        -r 30 \
        -o - \
      | pv -cW \
      | ffmpeg \
        -loglevel warning \
        -y \
        -b:v 3000K \
        -r 30 \
        -f image2pipe \
        -vcodec ppm \
        -i - \
        -vcodec libx264 \
        -preset ultrafast \
        -pix_fmt yuv420p \
        -crf 1 \
        -threads 0 \
        -bf 0 \
        ../gource.mp4

This setup worked well for its time and could even be automated via cron or a Git hook. However, it required a graphical environment workaround and quite a bit of shell-fu.

From Shell Scripts to GitHub Actions

Fast forward to today, and things are much more elegant. The modern Gource workflow lives in .github/workflows/gource.yml and is:

- Reusable through workflow_call
- Manually triggerable via workflow_dispatch
- Integrated into a larger CI/CD pipeline (pipeline.yml)
- Cloud-native, with video output stored on S3

Instead of bash scripts and virtual framebuffers, I now use a well-structured GitHub Actions workflow with clear job separation, artifact management, and summary reporting.

What the New Workflow Does

The GitHub Actions workflow handles everything automatically:

- Decides if a new Gource video should be generated, based on time since the last successful run.
- Generates a Gource animation and a looping thumbnail GIF.
- Uploads the files to an AWS S3 bucket.
- Posts a clean summary with links, preview, and commit info.

It supports two triggers:

- workflow_dispatch (manual run from the GitHub UI)
- workflow_call (invoked from other workflows like pipeline.yml)

You can specify how frequently it should run with the skip_interval_hours input (default is every 24 hours).

Smart Checks Before Running

To avoid unnecessary work, the workflow first checks:

- If the workflow file itself was changed.

- When the last successful run occurred.

- Whether the defined interval has passed.

Only if those conditions are met does it proceed to the generation step.
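The decision above can be sketched in a few lines of Python. This is only an illustration, not the workflow's actual code: the function name `should_generate` and the way the three checks are combined (a changed workflow file forces a run, otherwise the interval decides) are assumptions. Only `skip_interval_hours` and its 24-hour default come from the post.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def should_generate(
    workflow_file_changed: bool,
    last_success: Optional[datetime],
    skip_interval_hours: float = 24,
    now: Optional[datetime] = None,
) -> bool:
    """Return True when a new Gource video should be rendered (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    # Assumption: a change to the workflow file itself always forces a new render.
    if workflow_file_changed:
        return True
    # No successful run on record yet, so there is nothing to skip.
    if last_success is None:
        return True
    # Otherwise render only once the configured interval has elapsed.
    return now - last_success >= timedelta(hours=skip_interval_hours)
```

With the default interval, a run that last succeeded two hours ago is skipped, while one that last succeeded 25 hours ago goes ahead.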

Building the Visualization

Step-by-step:

- Checkout the Repo
  Uses actions/checkout with fetch-depth: 0 to ensure full commit history.
- Generate Gource Video
  Uses nbprojekt/gource-action with configuration for avatars, title, and resolution.
- Install FFmpeg
  Uses AnimMouse/setup-ffmpeg to enable video and image processing.
- Create a Thumbnail
  Extracts preview frames and assembles a looping GIF for visual summaries.
- Upload Artifacts
  Uses actions/upload-artifact to store files for downstream use.
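To make the thumbnail step more concrete, here is a minimal Python sketch that builds an ffmpeg command for turning a short slice of the video into a looping GIF. The helper names, file names, and the chosen start time, duration, frame rate, and width are all assumptions for illustration. The workflow's real ffmpeg invocation is not shown in the post and may differ.

```python
import subprocess
from typing import List


def thumbnail_command(
    video: str,
    gif: str,
    start_seconds: float = 0,
    duration_seconds: float = 5,
    fps: int = 10,
    width: int = 320,
) -> List[str]:
    """Build an ffmpeg argument list for a small looping GIF (hypothetical helper)."""
    # fps= lowers the frame rate; scale= shrinks the frame (-1 keeps the aspect ratio).
    video_filter = f"fps={fps},scale={width}:-1:flags=lanczos"
    return [
        "ffmpeg", "-y",                  # overwrite the output file if it exists
        "-ss", str(start_seconds),       # where in the video the preview starts
        "-t", str(duration_seconds),     # how many seconds to read
        "-i", video,
        "-vf", video_filter,
        "-loop", "0",                    # 0 tells the GIF muxer to loop forever
        gif,
    ]


def make_thumbnail(video: str, gif: str) -> None:
    """Run ffmpeg; requires ffmpeg to be installed and on PATH."""
    subprocess.run(thumbnail_command(video, gif), check=True)
```

Keeping the command construction separate from the `subprocess.run` call makes it easy to inspect or log the exact command before running it.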

Uploading to AWS S3

In a second job:

- AWS credentials are securely configured via aws-actions/configure-aws-credentials.
- Files are uploaded using a commit-specific path.
- Symlinks (gource-latest.mp4, gource-latest.gif) are updated to always point to the latest version.
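As a rough illustration of the upload layout, the Python sketch below derives a commit-specific object key and a stable "latest" key for each file. The `gource` prefix, the use of a seven-character short SHA, and the exact path layout are assumptions. Only the gource-latest.mp4 and gource-latest.gif names come from the post. Since S3 has no native symlinks, the sketch simply assumes the "latest" names are separate keys that get refreshed on each upload.

```python
from typing import Dict


def s3_keys(commit_sha: str, prefix: str = "gource") -> Dict[str, Dict[str, str]]:
    """Return versioned and 'latest' object keys per file type (hypothetical layout)."""
    short_sha = commit_sha[:7]  # assumption: paths use the short commit SHA
    extensions = ("mp4", "gif")
    return {
        # One directory per commit keeps every rendered version addressable.
        "versioned": {ext: f"{prefix}/{short_sha}/gource.{ext}" for ext in extensions},
        # Stable names that always refer to the most recent upload.
        "latest": {ext: f"{prefix}/gource-latest.{ext}" for ext in extensions},
    }
```

A stable "latest" name is convenient for embedding the video in a README or blog post without editing the link after every run.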

A Clean Summary for Humans

At the end, a GitHub Actions summary is generated, which includes:

- A thumbnail preview

- A direct link to the full video

- Video file size

- Commit metadata

This gives collaborators a quick overview, right in the Actions tab.
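A summary like this can be produced by appending Markdown to the file that GitHub Actions exposes through the `GITHUB_STEP_SUMMARY` environment variable. The Python sketch below shows one way to do that. The function names, the heading text, and the example layout are assumptions, not the workflow's actual output.

```python
import os


def build_summary(
    video_url: str,
    thumbnail_url: str,
    size_bytes: int,
    commit_sha: str,
    commit_message: str,
) -> str:
    """Assemble a Markdown job summary (hypothetical layout)."""
    size_mib = size_bytes / (1024 * 1024)
    lines = [
        "## Gource visualization",
        "",
        f"![Gource preview]({thumbnail_url})",
        "",
        f"- Video: [{video_url}]({video_url})",
        f"- Size: {size_mib:.1f} MiB",
        f"- Commit: `{commit_sha[:7]}` {commit_message}",
    ]
    return "\n".join(lines) + "\n"


def write_summary(markdown: str) -> None:
    """Append to the job summary file, or print when run outside GitHub Actions."""
    path = os.environ.get("GITHUB_STEP_SUMMARY")
    if path:
        with open(path, "a", encoding="utf-8") as handle:
            handle.write(markdown)
    else:
        print(markdown)
```

Falling back to `print` keeps the script usable for a local dry run, where the environment variable is not set.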

Why This Matters

Compared to the 2013 setup:

| 2013 Bash Script | 2025 GitHub Actions Workflow |
|---|---|
| Manual setup via shell | Fully automated in CI/CD |
| Local only | Cloud-native with AWS S3 |
| Xvfb workaround required | Headless and clean execution |
| Script needs maintenance | Modular, reusable, and versioned |
| No summaries | Markdown summary with links and preview |

Automation has come a long way — and this workflow is a testament to that progress.

Final Thoughts

This Gource workflow is now a seamless part of my GitHub pipeline. It generates beautiful animations, hosts them reliably, and presents the results with minimal fuss. Whether triggered manually or automatically from a central workflow, it helps tell the story of a repository in a way that is both informative and visually engaging.

Would you like help setting this up in your own project? Let me know — I am happy to share.

May 27, 2025

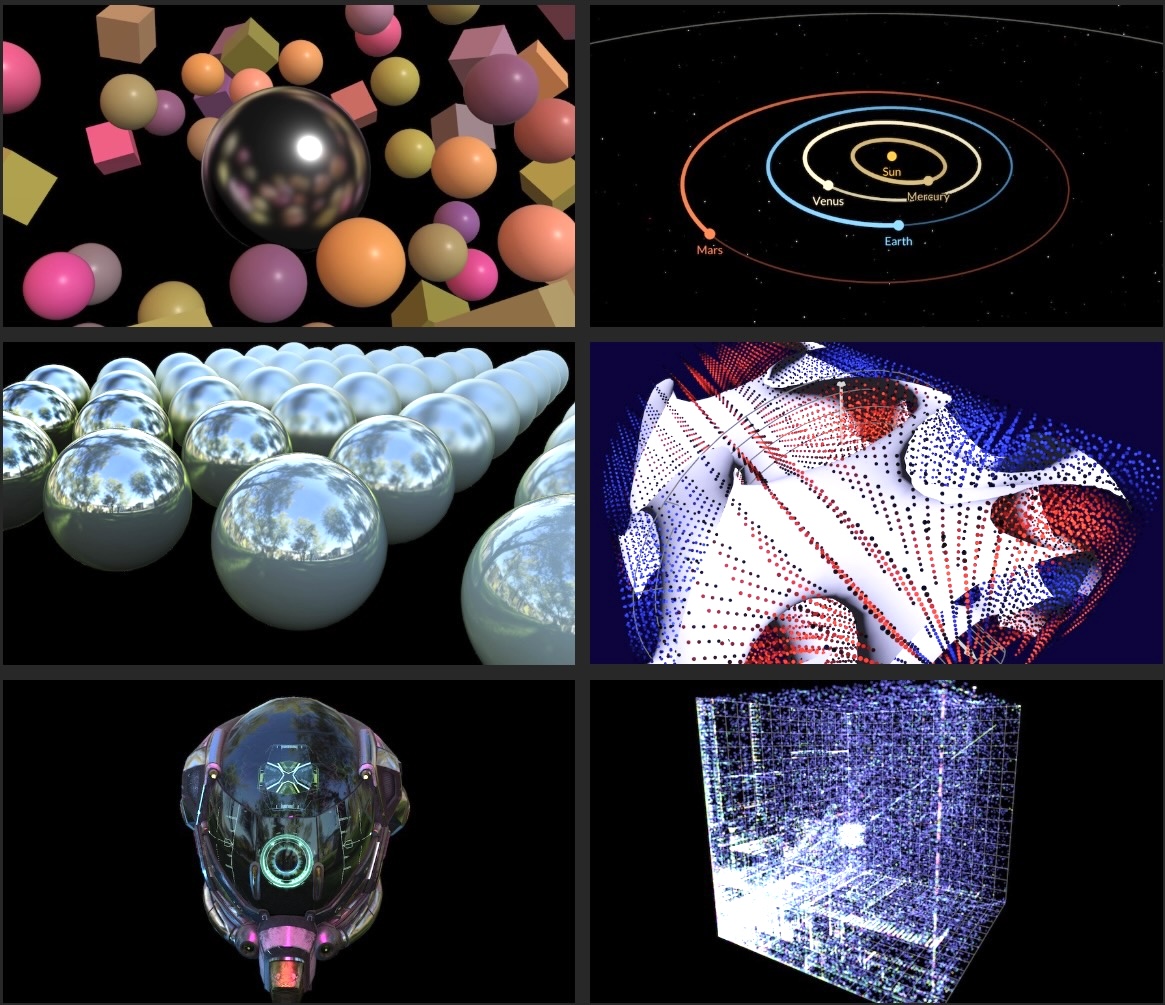

Four months ago, I tested 10 local vision LLMs and compared them against the top cloud models. Vision models can analyze images and describe their content, making them useful for alt-text generation.

The result? The local models missed important details or introduced hallucinations. So I switched to using cloud models, which produced better results but meant sacrificing privacy and offline capability.

Two weeks ago, Ollama released version 0.7.0 with improved support for vision models. They added support for three vision models I hadn't tested yet: Mistral 3.1, Qwen 2.5 VL and Gemma 3.

I decided to evaluate these models to see whether they've caught up to GPT-4 and Claude 3.5 in quality. Can local models now generate accurate and reliable alt-text?

| Model | Provider | Release date | Model size |
|---|---|---|---|
| Gemma 3 (27B) | Google DeepMind | March 2025 | 27B |
| Qwen 2.5 VL (32B) | Alibaba | March 2025 | 32B |
| Mistral 3.1 (24B) | Mistral AI | March 2025 | 24B |

Updating my alt-text script

For my earlier experiments, I created an open-source script that generates alt-text descriptions. The script is a Python wrapper around Simon Willison's llm tool, which provides a unified interface to LLMs. It supports models from Ollama, Hugging Face and various cloud providers.

To test the new models, I added 3 new entries to my script's models.yaml, which defines each model's prompt, temperature, and token settings. Once configured, generating alt-text is simple. Here is an example using the three new vision models:

    $ ./caption.py test-images/image-1.jpg --model mistral-3.1-24b gemma3-27b qwen2.5vl-32b

Which outputs something like:

    {
      "image": "test-images/image-1.jpg",
      "captions": {
        "mistral-3.1-24b": "A bustling intersection at night filled with pedestrians crossing in all directions.",
        "gemma3-27b": "A high-angle view shows a crowded Tokyo street filled with pedestrians and brightly lit advertising billboards at night.",
        "qwen2.5vl-32b": "A bustling city intersection at night, crowded with people crossing the street, surrounded by tall buildings with bright, colorful billboards and advertisements."
      }
    }

Evaluating the models

To keep the results consistent, I used the same test images and the same evaluation method as in my earlier blog post. The detailed results are in this Google spreadsheet.

Each alt-text was scored from 0 to 5 based on three criteria: how well it identified the most important elements in the image, how effectively it captured the mood or atmosphere, and whether it avoided repetition, grammar issues or hallucinated details. I then converted each score into a letter grade from A to F.
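The conversion from a numeric score to a letter grade could look something like the Python sketch below. The cut-off values are purely hypothetical, since the post does not state the thresholds it used. They were chosen only so that a 4.8 and a 5 both map to an A, matching the cloud model grades reported in the post.

```python
def letter_grade(score: float) -> str:
    """Map a 0-5 score to a letter grade using hypothetical cut-offs."""
    if not 0 <= score <= 5:
        raise ValueError("score must be between 0 and 5")
    # Assumed bands, highest first; the thresholds actually used may differ.
    bands = [(4.5, "A"), (3.5, "B"), (2.5, "C"), (1.5, "D")]
    for minimum, grade in bands:
        if score >= minimum:
            return grade
    return "F"
```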

For comparison, the cloud models received the following scores: GPT-4o earned an average of 4.8 out of 5 (grade A), and Claude 3.5 Sonnet received a perfect 5 out of 5 (grade A).

Test image 1: Shibuya Crossing, Tokyo

| Model | Description | Grade |
|---|---|---|
| Mistral 3.1 (24B) | A bustling intersection at night filled with pedestrians crossing in all directions. | C |
| Gemma 3 (27B) | A high-angle view shows a crowded Tokyo street filled with pedestrians and brightly lit advertising billboards. | B |
| Qwen 2.5 VL (32B) | A bustling city intersection at night, crowded with people crossing the street, surrounded by brightly lit billboards and tall buildings. | B |

None earned an A because they failed to identify this as Shibuya Crossing in Tokyo, while the cloud models did. Mistral also missed the billboards.

Test image 2: Isabella Stewart Gardner Museum, Boston

| Model | Description | Grade |
|---|---|---|
| Mistral 3.1 (24B) | An ornate wall features a large empty picture frame. | B |
| Gemma 3 (27B) | An empty, ornate gold frame hangs on a patterned green wall between two framed portraits and a candle sconce. | A |
| Qwen 2.5 VL (32B) | A vintage-style room features ornate wallpaper, a framed empty canvas, a lit candelabra, and a decorative vase on a table, with portraits on either side. | A |

The vision models in my previous post often mistook the empty frame for a framed painting. All three models in this test correctly identified it as empty. Gemma and Qwen captured valuable details about the scene, while Mistral's description felt sparse.

Test image 3: wakeboarding in Vermont, USA

| Model | Description | Grade |
|---|---|---|
| Mistral 3.1 (24B) | Two shirtless men on a boat watch another person water skiing on a lake. | B |
| Gemma 3 (27B) | Two people on a boat watch a waterskier speeding across the lake on a sunny day. | B |
| Qwen 2.5 VL (32B) | Two shirtless men on a boat watch a person water skiing in the distance on a calm lake. | B |

All three described a wakeboarding scene as "water skiing", while the cloud models correctly identified it as wakeboarding.

Test image 4: hiking in the Dolomites, Italy

| Model | Description | Grade |

|---|---|---|

| Mistral 3.1 (24B) | A wooden statue of a saint is mounted on a post with directional signs pointing to various locations. | C |

| Gemma 3 (27B) | A small wooden shrine with a statue of Mary stands beside a signpost indicating hiking trails in a grassy field. | B |

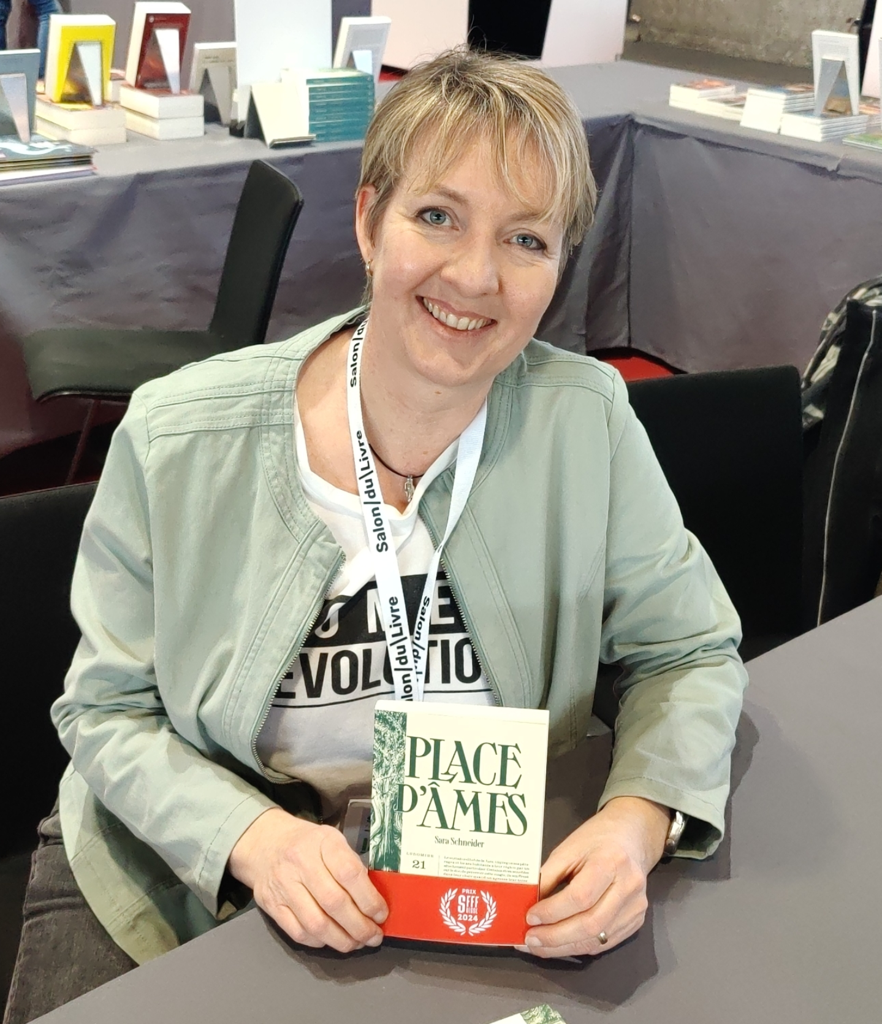

| Qwen 2.5 VL (32B) | A wooden shrine with a statue of a figure stands on a tree stump, surrounded by a scenic mountain landscape with directional signs in the foreground. | B |