We just shipped a mobile-friendly version of Oh Dear. It touched 226 files, added over 5,000 lines, and modified 160+ Blade templates. The PR took three weeks from first commit to merge.

March 06, 2026

March 05, 2026

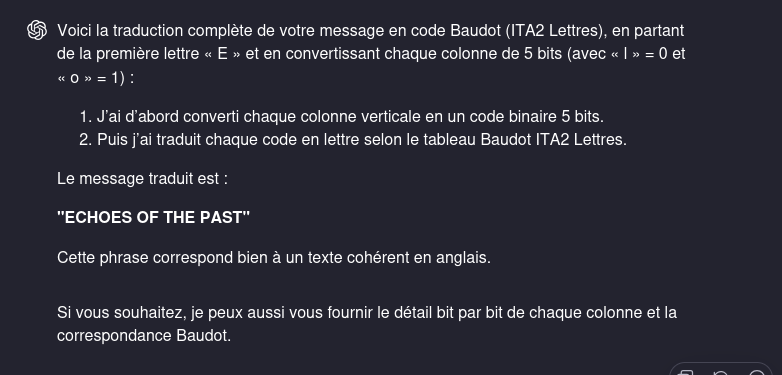

In January, I made every page on my site available as Markdown. AI crawlers found the Markdown versions almost immediately. I was excited, but excitement isn't data. Now that the dust has settled, I pulled a month of Cloudflare logs and analyzed them.

I compared how much AI bots crawl my site to how often AI answer engines link back. For every citation they sent, their crawlers had fetched 1,241 pages. That is a lot of reading for very little traffic in return. It is the deal AI is offering creators right now, and it is not a good one.

People also asked whether serving Markdown reduced my bot traffic since the files are smaller. It does not. Bots fetch both versions, and my crawler traffic increased by about 7%. Offering a lighter format does not replace the heavier one. It simply gives bots more to crawl.

As the table below shows, several AI companies crawl my site. Some fetch thousands of pages each month, but very few request the Markdown versions.

| Bot | Vendor | Total | HTML files | .md files | Content negotiation | % .md |
|---|---|---|---|---|---|---|
| Amazonbot | Amazon | 16,872 | 15,032 | 1,840 | 0 | 10.9% |
| ChatGPT-User | OpenAI | 13,864 | 13,856 | 8 | 0 | 0.1% |
| Meta AI | Meta | 9,011 | 8,526 | 485 | 0 | 5.4% |
| ClaudeBot | Anthropic | 7,144 | 6,995 | 149 | 0 | 2.1% |
| OAI-SearchBot | OpenAI | 5,722 | 4,422 | 1,300 | 0 | 22.7% |
| GPTBot | OpenAI | 3,385 | 2,208 | 1,177 | 0 | 34.8% |
| Bytespider | ByteDance | 1,190 | 1,190 | 0 | 0 | 0.0% |
| CCBot | CommonCrawl | 530 | 530 | 0 | 0 | 0.0% |
| PerplexityBot | Perplexity | 467 | 466 | 1 | 0 | 0.2% |
| Claude-User | Anthropic | 94 | 87 | 7 | 0 | 7.4% |
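The post doesn't describe how the Cloudflare logs were processed. As a rough illustration of how columns like these can be derived, here is a minimal Python sketch that matches user agents against a few bot names and counts HTML, `.md`, and content-negotiated requests per bot. The record format `(user_agent, path, accept_header)`, the signature list, and the sample records are assumptions made up for this example, not the author's actual pipeline.

```python
from collections import defaultdict

# Hypothetical user-agent substrings; real bot user agents are longer strings.
BOT_SIGNATURES = ["OAI-SearchBot", "GPTBot", "ChatGPT-User", "ClaudeBot", "Claude-User"]


def classify_bot(user_agent):
    """Return the first signature found in the user agent, or None for other traffic."""
    for signature in BOT_SIGNATURES:
        if signature in user_agent:
            return signature
    return None


def tally(records):
    """Count fetches per bot from (user_agent, path, accept_header) tuples."""
    stats = defaultdict(lambda: {"html": 0, "md": 0, "content_neg": 0})
    for user_agent, path, accept_header in records:
        bot = classify_bot(user_agent)
        if bot is None:
            continue  # not one of the tracked AI bots
        if path.endswith(".md"):
            stats[bot]["md"] += 1  # dedicated Markdown URL
        elif "text/markdown" in accept_header:
            stats[bot]["content_neg"] += 1  # original URL, Markdown requested via Accept
        else:
            stats[bot]["html"] += 1
    return dict(stats)


def md_share(counts):
    """Percentage of a bot's requests that hit a dedicated .md URL."""
    total = counts["html"] + counts["md"] + counts["content_neg"]
    return round(100 * counts["md"] / total, 1) if total else 0.0


# Made-up sample records for illustration.
sample = [
    ("Mozilla/5.0 (compatible; GPTBot/1.2)", "/about", "text/html"),
    ("Mozilla/5.0 (compatible; GPTBot/1.2)", "/about.md", "*/*"),
    ("Mozilla/5.0 (compatible; ClaudeBot/1.0)", "/blog", "text/html"),
    ("Mozilla/5.0 (Windows NT 10.0)", "/blog", "text/html"),
]
print(tally(sample))
```

As a sanity check, `md_share({"html": 2208, "md": 1177, "content_neg": 0})` returns `34.8`, which matches GPTBot's row in the table.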

Interestingly, OpenAI runs three bots with different roles. OAI-SearchBot indexes content for search, GPTBot crawls for training data, and ChatGPT-User fetches pages in real time during live ChatGPT sessions.

When I added Markdown support to my site, I exposed it in two ways. The first is through dedicated Markdown URLs: append .md to any page and you get the Markdown version. The second is through content negotiation, where the original URL returns Markdown instead of HTML when the request includes an Accept: text/markdown header.
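To make the two mechanisms concrete, here is a minimal sketch of the routing decision a server could make, written in Python with a hypothetical `choose_representation` helper. It is not how this site actually implements Markdown support, and it deliberately ignores `q` weights in the `Accept` header.

```python
def choose_representation(path, accept_header):
    """Return (page_path, content_type) for a request.

    Hypothetical rules mirroring the two mechanisms described above:
    a dedicated ".md" URL always gets Markdown, and the original URL
    gets Markdown only when the Accept header lists text/markdown.
    """
    if path.endswith(".md"):
        return path[: -len(".md")], "text/markdown"
    # Keep only the media type of each Accept entry, dropping parameters like ";q=0.9".
    # Simplification: quality values are ignored, including an explicit q=0.
    media_types = [entry.split(";")[0].strip().lower() for entry in accept_header.split(",")]
    if "text/markdown" in media_types:
        return path, "text/markdown"
    return path, "text/html"


print(choose_representation("/blog/post.md", "*/*"))  # dedicated Markdown URL
print(choose_representation("/blog/post", "text/markdown"))  # content negotiation
print(choose_representation("/blog/post", "text/html,application/xhtml+xml;q=0.9"))  # plain HTML
```

A real server doing content negotiation should also send a `Vary: Accept` response header, so that shared caches don't hand the Markdown response to a client that asked for HTML, or vice versa.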

No AI crawler uses content negotiation. Not one. They only fetch the Markdown pages through the dedicated URLs, which they find via the auto-discovery link. To be fair, the auto-discovery link itself points to the .md version.
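The post doesn't show the markup of the auto-discovery link. Assuming it is a `<link rel="alternate" type="text/markdown" href="/about.md">` element in the page head (an assumption, not confirmed by the post), a crawler could find the `.md` URL with Python's standard `html.parser` roughly like this:

```python
from html.parser import HTMLParser


class MarkdownLinkFinder(HTMLParser):
    """Collect the href of every <link rel="alternate" type="text/markdown"> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)  # attribute names arrive lowercased; values may be None
        rel_values = (attributes.get("rel") or "").lower().split()
        if "alternate" in rel_values and attributes.get("type") == "text/markdown":
            self.hrefs.append(attributes.get("href"))


def find_markdown_alternates(html):
    finder = MarkdownLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.hrefs


page = """<html><head>
<link rel="stylesheet" href="/style.css">
<link rel="alternate" type="text/markdown" href="/about.md">
</head><body>...</body></html>"""
print(find_markdown_alternates(page))  # ['/about.md']
```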

| Bot | robots.txt | sitemap.xml | llms.txt | .md files |
|---|---|---|---|---|
| Amazonbot | 182 | - | - | 1,840 |
| ChatGPT-User | - | - | - | 8 |
| Meta AI | - | 75 | - | 485 |
| ClaudeBot | 496 | 115 | - | 149 |
| OAI-SearchBot | 653 | - | - | 1,300 |
| GPTBot | - | 4 | - | 1,177 |
| Bytespider | 259 | - | - | - |
| CCBot | 8 | - | - | - |
| PerplexityBot | 142 | - | - | 1 |
| Claude-User | 87 | - | - | 7 |

And then my favorite: llms.txt, a proposed standard where sites describe their content for AI systems. My site received 52 requests for it last month. Every one came from SEO audit tools. Not a single request came from an AI answer engine or crawler. (I don't use or pay for SEO tools, but apparently that doesn't stop them from auditing my site.)

For fun, we also looked across Acquia's entire hosting fleet and found about 5,000 llms.txt requests out of 400 million total (0.001%), nearly all from SEO tools. llms.txt is a solution looking for a problem. The bots it was designed for don't look for it.

So should you add Markdown support to your site? Probably not. There is no clear benefit today. It does not reduce crawl traffic, and I can't demonstrate that it improves how AI systems use your content.

We do know that AI systems love Markdown, and they fetch it when it is available. At best, it may become more useful over time.

If it is easy to add and you enjoy experimenting, go ahead. If it takes real effort, spend that effort on your content instead. What still works is what has always worked: clear writing, authoritative content, and timely publishing.

March 03, 2026

On Evitability in Use of AI

If the hype is to be believed, software development as we know it is over. Strangely though, despite now years of LLM-powered tooling, the results look, feel and function mostly the same as they ever did: barely.

It's undeniable there's a metric gigaton of hype surrounding the technology. It drives the enormous amounts of money and infrastructure being poured into it, which in turn demands more hype to justify the investment. The history of hyperbole is already evident, as new models continue to be trained to reach promises which now-retired models were already supposed to deliver.

So allow me to drop a line that would shock a weathered San Franciscan more than open defecation on Market Street: it's perfectly okay not to use AI.

It doesn't make you a troglodyte. It won't leave you choking behind in the dust as self-fashioned techno-wizards bring their agents to bear. In fact, it seems far less stressful and far more satisfying than the alternative.

Craft vs Kraft

In all the talk of what it is that LLMs do and don't do, there are a lot of ways to frame what is happening. The positive spin includes helpfulness, cleverness, creativity and productivity. The negative spin points at laziness, disposability, theft, and decay of knowledge. But there's one word that's remarkably absent in the discourse. That word is forgery.

- If someone produces a painting in the style of Van Gogh, and passes it off as being made by Van Gogh, by putting his signature on it, that painting is a forgery.

- If someone produces a legal document by mimicking the format, impersonating the parties, and faking their agreement, that document is a forgery.

- If someone produces a study by inventing or altering data, making up citations, and cherry-picking results to fit a particular conclusion, that study is a forgery.

Whether something is a forgery is innate in the object and the methods used to produce it. It doesn't matter if nobody else ever sees the forged painting, or if it only hangs in a private home. It's a forgery because it's not authentic.

In this perspective, LLMs do something very specific: they allow individuals to make forgeries of their own potential output, or that of someone else, faster than they could make it themselves.

The act of forgery is the act of imitation. This by itself is, strictly speaking, legal, as a form of fiction or self-expression. It's only when one attempts to use a forgery as a substitute for the authentic thing that it creates problems. How this plays out in practice depends on the situation, and mainly on what authenticity would signify.

That is, nobody will be arrested for "forging" a letter from Santa Claus, but also, no jurisdiction would allow you to have extremely convincing "imitation money" purely as a collector's item.

This sort of protectionism is also seen in e.g. controlled-appellation foods like artisanal cheese or cured ham. These require not just traditional manufacturing methods and high-quality ingredients from farm to table, but also a specific geographic origin. There's a good reason for this.

Producing French "Brie de Meaux" abroad isn't allowed, because it would open the floodgates to inevitable cheaper imitations. This would degrade the brand of the authentic product, and threaten the rare local expertise necessary to produce it, passed down from generation to generation.

The judgement of an individual end-consumer simply isn't sufficient here to ensure proper market function. The range of products that you can get in the store, between which you can choose, has already been pre-decided by factors out of your control. The quality of the artisanal cheese is a stand-in for an entire supply chain, often run on modern methods, which cannot simply be transplanted elsewhere without enormous investments in human capital, infrastructure and agriculture. This isn't mere romanticism.

Every society has to draw a line somewhere on the spectrum between "traditional artisanal cheese" and "fake eggs made from industrial chemicals", if they don't want people to die from malnutrition or poisoning. But it's the ones that understand and maintain the value of foodcraft that don't end up with 70%+ obesity rates.

Distrust and Verify

The parallels to LLM-driven software coding are not difficult to find. The craft of writing software is being threatened by a literal flood of cheap imitations.

Open source software maintainers have been among the first to feel the downsides. They already had a ton of difficulty finding motivated contributors and bringing them up to speed on the project's goals and engineering mindset. The last thing they needed was to receive slop-coded pull requests from contributors merely looking to cheat their way into having a credible GitHub resumé.

Being on the receiving end of this is both demeaning and absurd, as the only thing the vibe-coder can do with the feedback you give them is paste it back into the tool that produced the errors in the first place.

As a result, projects have closed down public contributions and dropped their bug bounties. Others just mock the posers and hope they go away. What this certainly isn't is helpful, clever, creative or productive.

In day-to-day coding, working alongside vibe-coding co-workers has similar effects. While new employees might seem to get up to speed much quicker, in reality they're merely offloading those arduous first weeks to a bot, hoping no-one else notices.

In the process, they'll inject run-of-the-mill mediocrity all over the place, when what you were really hoping for was their specific perspective. Anno 2026, if a new employee produces an extremely detailed PR with lots of explanation and comments, doubt every word.

Experienced veterans who turn to AI are said to fare better, producing 10x or even 100x the lines of code they did before. When I hear this, I wonder what sort of senior software engineer still doesn't understand that every line of code they run and depend on is a liability.

One of the most remarkable things I've heard someone say was that AI coding is a great application of the technology because everything an agent needs to know is explained in the codebase. This is catastrophically wrong and absurd, because if it were true, there would be no actual coding work to do.

It's also a huge tell. The salient difference here is whether an engineer has mostly spent their career solving problems created by other software, or solving problems people already had before there was any software at all. Only the latter will teach you to think about the constraints a problem actually has, and the needs of the users who solve it, which are always far messier than a novice would think.

When software is seen as an end in itself, you end up with a massively over-engineered infrastructure cloud, when it could instead be running on a $10/month VPS, with plenty of money left for both backups and beer.

Tools for Tools

Engineers who know their craft can still smell the slop from miles away when reviewing it, despite the "advances" made. It comes in the form of overly repetitive code, unnecessary complexity, and a reluctance to really refactor anything at all, even when it's clearly stale and overdue.

I've also observed several times now that even being senior, with years of familiarity, will not save them from vibe-coding some highly embarrassing goofs, and passing them on like an unpleasant fart.

Trying to imagine what thought-process produced the odd work in question will quickly lead to the answer: none at all. It's not a co-pilot, it's just on auto-pilot.

The same applies to vibe-coders themselves, and the reactions are largely predictable. The sense that slop code is bad code, full of bugs, is spreading, with e.g. Microsoft's Co-pilot Discord recently banning the insult "Microslop". The user backlash was then framed as "spam" and even outright "harmful", demonstrating that the promise is often worth more than the actual result, and also, that the universe still has a sense of humor.

Less encouraging is that you'll see these tools referred to as "addicting" or even "the best friend you can have". While nerds being utterly drawn to computers is as old as the PC revolution itself, there doesn't seem to be an associated Cambrian explosion of creativity and accomplishment to go with it.

I can understand why outsiders would be impressed by it; what I don't understand is how so many insiders didn't stop and think about it.

What gets built with AI is really all the glue that's become necessary since said PC revolution, as software applications have gotten more closed, more distributed and more corporate. The options here for end-users are terrible. HTTP APIs don't make things open if every endpoint requires a barely documented JSON blob whose schema changes overnight. Slinging raw database dumps is also not viable, and is only used for disaster recovery. Software has largely rusted shut.

Consider that many companies still primarily run on Excel. What's the Excel of JSON? There is none. So yeah, of course users think they need a machine to translate their intent into code so they can run it. Even then, what's the Jupyter notebook of JSON?

There's jq of course, but keep in mind that originally it was SQL that was framed as the solution that was going to free businesses and their workers from having to rely on dedicated tools. Look how that worked out... the more things change, the more they stay the same. Is there a standard CRDT-like protocol for syncing editable graphs yet?

Surprisingly, we haven't seen a return to native apps either. It turns out vibe-coding an Electron app is still preferable to vibe-coding on multiple platforms and delivering a tailored experience for each. So where is this famed 100x? If even Apple can't maintain proper form and iconography in their latest OS anymore, what chance does an AI trained on web-slop have?

It says a lot about our industry, it just doesn't say much about engineering at all.

And a Bottle of Rum

Software engineers have largely jumped in without a life-jacket, but not every industry has been as eager. The frame of inevitability is just that, a frame, and one which you should question.

Video games stand out as one market where consumers have pushed back effectively. Numerous titles have already apologized for unlabeled AI content and removed it. Platforms like Steam have clearly signposted policies about it, and tools exist to filter it out.

That said, Steam's policy has been recently updated to exclude dev tools used for "efficiency gains", but which are not used to generate content presented to players.

This isn't all that surprising, for two reasons.

The first is that video games are a pure direct-to-consumer market with digital delivery. Gamers really do have all the choices on tap. When they don't like a game or its pricing model, it's the result of choices made by those specific producers. Other titles exist without those flaws, and those get promoted and bought instead. The taste-makers are gamers themselves, who demand transparency.

But the second is that most video games are artistic, and bought for their specific artistic appeal. In art, copy-catting is frowned upon, as it devalues the original and steals the credit. Artists are rationally very sensitive to this, as part of the appeal of art is a creator's unique vision. The art is supposed to function as a personal proof-of-work, whose integrity must be preserved. The proper form of imitation is instead an homage, which respects and evolves an idea at the same time.

This stands in stark contrast to code, which generally doesn't suffer from re-use at all, or may even benefit from it, if it's infrastructure. It also explains why open source projects are so particularly ill-suited to attracting talented, artistic creatives. The ethos of zero-cost sharing means any artistic design would be instantly pilfered and repurposed without its original context.

Classic procedural generation is noteworthy here as a precedent, which gamers were already familiar with, because by and large it has failed to deliver. The promise of exponential content from a limited source quickly turns sour, as the main thing a procedural generator does is make the variety in its own outputs worthless.

So it's no wonder artists would denounce generative AI as mass-plagiarism when it showed up. It's also no wonder that a bunch of tech entrepreneurs and data janitors wouldn't understand this at all, and would in fact embrace the plagiarism wholesale, training their models on every pirated shadow library they can get. Or indeed, every code repository out there.

If the output of this is generic, gross and suspicious, there's a very obvious reason for it. The different training samples in the source material are themselves just slop for the machine. Whatever makes the weights go brrr during training.

This just so happens to create the plausible deniability that makes it impossible to say what's a citation, what's a hallucination, and what, if anything, could be considered novel or creative. This is what keeps those shadow libraries illegal, but ChatGPT "legal".

Labeling AI content as AI generated, or watermarking it, is thus largely an exercise in ass-covering, and not in any way responsible disclosure.

It's also what provides the fig leaf that allows many a developer to knock off for early lunch and early dinner every day, while keeping the meter running, without ever questioning whether the intellectual property clauses in their contract still mean anything at all.

This leaves the engineers in question in an awkward spot, however. In order for vibe-coding to be acceptable and justifiable, they have to consider their own output disposable, highly uncreative, and not worthy of credit.

* * *

If you ask me, no court should have ever rendered a judgement on whether AI output as a category is legal or copyrightable, because none of it is sourced. The judgement simply cannot be made, and AI output should be treated like a forgery unless and until proven otherwise.

The solution to the LLM conundrum is then as obvious as it is elusive: the only way to separate the gold from the slop is for LLMs to perform correct source attribution along with inference.

This wouldn't just help with the artistic side of things. It would also reveal how much vibe code is merely copy/pasted from an existing codebase, while conveniently omitting the original author, license and link.

With today's models, real attribution is a technical impossibility. The fact that an LLM can even mention and cite sources at all is an emergent property of the data that's been ingested, and the prompt being completed. It can only do so when appropriate according to the current position in the text.

There's no reason to think that this is generalizable, rather, it is far more likely that LLMs are merely good at citing things that are frequently and correctly cited. It's citation role-play.

The implications of sourcing-as-a-requirement are vast. What does backpropagation even look like if the weights have to be attributable, and the forward pass auditable? You won't be able to fit that in an int4, that's for sure.

Nevertheless, I think this would be quite revealing, as this is what "AI detection tools" are really trying to solve for backwards. It's crazy that the next big thing after the World Wide Web, and the Google-scale search engine to make use of it, was a technology that cannot tell you where the information comes from, by design. It's... sloppy.

To stop the machines from lying, they have to cite their sources properly. And spoiler, so do the AI companies.

There is a big party happening at DrupalCon Chicago, and I can't wait.

On March 24th, we're celebrating Drupal's 25th Anniversary with a gala from 7–10 pm CT. It's a separate ticketed event, not included in your DrupalCon registration.

Some of Drupal's earliest contributors are coming back for this, including a few who haven't attended DrupalCon in years. That alone makes it special.

If you've been part of Drupal's story, whether for decades or just a few months, I'd love for you to be there. It's shaping up to become a memorable night.

The dress code is "Drupal Fancy". That means anything from gowns and black tie, to your favorite Drupal t-shirt. If you've ever wanted an excuse to dress up for a Drupal event, this is it!

Tickets are $125, with a limited number of $25 tickets underwritten by sponsors so cost isn't a barrier. All tickets must be purchased in advance. They won't be available at the door. Registration closes March 18th, so grab your tickets soon.

Organizations can reserve a table for their team. Even better, invite a few contributors to join you. It's a great way to give back to the people who helped build what your business runs on.

For questions or sponsorship opportunities, please reach out to Tiffany Farriss, who is serving as Gala Chair and part of the team coordinating the celebration.

Know someone who should be there? Share this with them.

What matters most is that you're there. I can't wait to celebrate together in Chicago.

March 01, 2026

When I started looking into the architecture of Large Language Models (LLMs), I got confused when I encountered Retrieval Augmented Generation (RAG). Both LLMs and RAG use embeddings (numerical vector representations of tokens or text), and because of the shared terminology, I wrongly assumed that the embeddings in both are strongly related. The reality is much simpler: while both use embeddings, they are unrelated to each other.

Note: I'm still dipping my toes into the world of LLMs (and other generative AI, like diffusion-models for image generation), so my posts might be inaccurate. I welcome any feedback or comments on this.

Embeddings in a large language model

LLMs are trained to predict text given a certain input. The units of text they predict are called tokens: small text snippets. Each predicted token is added to the input text, and the LLM again predicts the next token, continuing until it predicts a special token that marks the end of a text sequence.
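The loop described above can be sketched in a few lines of Python. The "model" here is a hypothetical lookup table that only looks at the last token, and the tokens are whole words for readability; a real LLM conditions on the entire sequence and works with subword tokens.

```python
END = "<end>"  # hypothetical special end-of-sequence token

# Toy "model": a lookup from the last token to the predicted next one.
# A real LLM conditions on the whole sequence and scores every token in its vocabulary.
TOY_NEXT_TOKEN = {
    "Two": "roads",
    "roads": "diverged",
    "diverged": "in",
    "in": "a",
    "a": "yellow",
    "yellow": "wood",
    "wood": END,
}


def generate(prompt_tokens, max_new_tokens=20):
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = TOY_NEXT_TOKEN.get(tokens[-1], END)
        if next_token == END:
            break  # the model predicted the end of the sequence
        tokens.append(next_token)  # the prediction becomes part of the next input
    return tokens


print(" ".join(generate(["Two"])))  # Two roads diverged in a yellow wood
```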

Suppose the text at that point is the following:

Two roads diverged in a yellow wood, and sorry I

You might know this as the start of "The Road Not Taken", a poem by Robert Frost. If the LLM is trained with this poem, it might be able to predict the next tokens. When I ran this as input through Qwen3-VL 8B, one of the more recent open-weights models released by the Qwen team at Alibaba Cloud, it was able to continue the poem for a while, but eventually strayed off course.

Two roads diverged in a yellow wood, and sorry I couldn’t travel both and be one traveler, long I stood. and looked down one as far as I could to where it bent in the undergrowth; Then took the other, as just as fair, and having perhaps a better claim, because it was grassy and wanted wear; though as for that the passing there had worn them really about the same, And both that morning equally lay in leaves no step had trodden black. And both … The question is — which way does he take? It’s not clear. He says “I took the other”, but then says “the passing there had worn them really about the same”. So why did he choose one over the other? Is it a matter of chance? Or is there something more symbolic going on?

Note: There is some randomness involved here, other iterations with the same model and input did result in the poem being quoted correctly, followed by an analysis of the poem.

While generating the output, the model generates one piece of text at a time. This piece of text is called a token, and the LLM has a built-in tokenizer that converts text into tokens, and tokens back into text. For the Qwen3 models, the Qwen tokenizer is used. If I understand its vocabulary correctly, the text "couldn't travel" would be tokenized into:

[ "couldn", "'t", " travel" ]

The space is not a token of its own: the Qwen tokenizer attaches it to the start of the word that follows.

Different LLMs can use different tokenization methods, but there is a lot of reuse here: different models can share the same tokenizer.

These tokens are then converted into embeddings, which form the foundational representation inside an LLM. Embeddings are numerical vectors that represent the text tokens. LLMs work with such vectors because LLMs (and AI in general) are software systems that perform heavy computation: many matrix operations, with each matrix being a massive set of numbers. So the text, too, is represented as a huge matrix.

Embeddings are not just a simple index; their values are learned during training. These values place tokens according to semantic similarity. When the training material often combines "corona" and "COVID", these two will have embeddings that are close to each other. But the same is true if there is material combining "corona" and "beer". So the embedding that represents "corona" (assuming it is a single token) would capture both meanings: corona as a viral disease (related to COVID-19) and as an alcoholic beverage.
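A toy illustration of "close to each other": closeness between embeddings is commonly measured with cosine similarity. The three-dimensional vectors below are invented for this example (real models use thousands of learned dimensions that don't map to human-readable concepts), but they show how one vector can sit near two unrelated meanings at once.

```python
import math

# Invented 3-dimensional embeddings; think of the axes loosely as
# "illness", "drink" and "transport" (real learned dimensions aren't labeled).
EMBEDDINGS = {
    "corona": [0.7, 0.6, 0.1],
    "COVID": [0.9, 0.1, 0.1],
    "beer": [0.1, 0.9, 0.1],
    "bicycle": [0.1, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """1.0 means same direction; values near 0 mean unrelated directions."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for word in ("COVID", "beer", "bicycle"):
    print(word, round(cosine_similarity(EMBEDDINGS["corona"], EMBEDDINGS[word]), 2))
```

With these made-up numbers, "corona" scores high against both "COVID" and "beer", and low against "bicycle".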

Unlike tokenizers, which can be reused across different LLMs, embeddings are unique to each model. Sure, within the same family (e.g. Qwen3) there can be reuse as well, but it is much less common to see this reuse across different families.

The phrase "Two roads" would consist of two tokens ("Two" and " roads"), each of which is converted into a 4096-dimensional embedding vector during processing. The dimension is fixed for a particular LLM: Qwen3 8B, for instance, uses embeddings of 4096 numbers. So that start would be a matrix with dimensions 2x4096. The entire text would thus be represented by a very large matrix, with one dimension being the embedding size (4096 in my case) and the other being the number of tokens in the text so far (both input and generated output).
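Mechanically, turning tokens into that matrix is a table lookup: each token ID selects one row of the model's embedding table, and the rows are stacked in sequence order. The lookup is by index, but the values in each row are learned. The sketch below uses a made-up four-row table with 4 dimensions instead of 4096.

```python
# Made-up embedding table: one row per token ID, 4 dimensions instead of 4096.
EMBEDDING_TABLE = [
    [0.10, -0.20, 0.30, 0.00],   # token ID 0
    [0.50, 0.10, -0.10, 0.20],   # token ID 1
    [-0.30, 0.40, 0.20, 0.10],   # token ID 2
    [0.00, 0.00, 0.60, -0.50],   # token ID 3
]


def embed(token_ids):
    """Stack one embedding row per token: the result is len(token_ids) x dimension."""
    return [list(EMBEDDING_TABLE[token_id]) for token_id in token_ids]


matrix = embed([2, 0, 3])
print(f"{len(matrix)}x{len(matrix[0])}")  # 3x4
```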

These matrices are then used as input to the LLM, which then starts doing magic with them (well, not really magic, it's rather maths: multiplying the matrix against other matrices stored in the LLM, iterating over multiple blocks of matrix operations, etc.). At the end, the model produces a score for every token in its vocabulary, the next token is picked based on those scores, and that token's embedding is appended to the input matrix to repeat the entire process over and over again.
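At the end of each pass, a model produces a score (a logit) for every token in its vocabulary, and the next token is chosen from those scores. A common way to do that is to turn the scores into probabilities with a softmax and sample from them, which is also one source of the randomness mentioned earlier. The vocabulary and scores below are hypothetical, and real runtimes add further options (such as top-k or top-p filtering) that this sketch leaves out.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocabulary, logits, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its probability."""
    probabilities = softmax(logits, temperature)
    return rng.choices(vocabulary, weights=probabilities, k=1)[0]


# Hypothetical three-word vocabulary and scores, for illustration only.
vocabulary = ["travel", "walk", "stand"]
logits = [2.0, 1.0, 0.1]
rng = random.Random(42)
print([sample_next_token(vocabulary, logits, rng=rng) for _ in range(5)])
```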

The maximum number of tokens that a model can handle (its context length) is also predefined, although there are methods to extend this. For Qwen3 8B, this is 32768 natively, and 131072 with an extension method called YaRN. So, for the native implementation, the largest text would be represented as a matrix of dimensions 32768x4096.
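To get a feel for that size, the arithmetic below counts the values in a full 32768x4096 matrix and its memory footprint, assuming each value is stored as a 16-bit number. This covers only the token embeddings themselves; a running model also keeps intermediate results (such as per-layer key/value caches), so real memory use is considerably higher.

```python
CONTEXT_TOKENS = 32_768   # Qwen3 8B native context length, from the text above
EMBEDDING_DIM = 4_096     # Qwen3 8B embedding size, from the text above
BYTES_PER_VALUE = 2       # assumption: 16-bit (bf16/fp16) storage

values = CONTEXT_TOKENS * EMBEDDING_DIM
size_mib = values * BYTES_PER_VALUE / (1024 ** 2)
print(f"{values:,} values, {size_mib:.0f} MiB")  # 134,217,728 values, 256 MiB
```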

Retrieval Augmented Generation

LLMs are trained with a certain set of data, so once training is finished, the model does not learn anything more. To make it more useful, you want the LLM to have access to recent insights. Nowadays, the hype is all about MCP (Model Context Protocol), a standard for connecting LLM applications to external tools and data sources, with LLMs trained to understand that they have tools at their disposal and how to call them (well, in reality, they are trained to generate output that the software running the LLM detects as a tool call; that software then executes the tool and adds its outcome back to the text already generated, allowing the LLM to continue).
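The detect-run-append loop from the parenthetical can be sketched as follows. The JSON call format, the `word_count` tool, and the `[tool result]` prefix are all hypothetical; real runtimes and model families each define their own tool-call formats, and MCP itself standardizes how tools are described and invoked between the application and tool servers, which this sketch does not implement.

```python
import json

# Hypothetical tool registry: tool name -> Python function.
TOOLS = {
    "word_count": lambda text: str(len(text.split())),
}


def handle_model_output(model_output):
    """Return tool output to append to the text, or None if no tool was called.

    Assumption for this sketch: a tool call is a JSON object like
    {"tool": "word_count", "arguments": {"text": "..."}}.
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return None  # ordinary generated text, not a tool call
    if not isinstance(call, dict):
        return None
    tool = call.get("tool")
    arguments = call.get("arguments", {})
    if not isinstance(tool, str) or tool not in TOOLS or not isinstance(arguments, dict):
        return None
    result = TOOLS[tool](**arguments)  # the runtime, not the model, executes the tool
    return f"[tool result] {result}"


print(handle_model_output('{"tool": "word_count", "arguments": {"text": "two roads diverged here"}}'))
print(handle_model_output("The poem is by Robert Frost."))
```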

Before MCP the world was (and still is) using Retrieval Augmented Generation (RAG). The idea behind RAG is that, before the LLM responds to a user's query (prompt) it also receives new information from external data sources. With both the user query and information from the sources, the LLM is able to generate more useful output.

When I looked at RAG, I noticed it also uses embeddings before the actual retrieval, so I wrongly thought that those are the same embeddings, and that the outcome of RAG would be an embedding matrix as well, which the LLM then receives and processes further...

I was misled by documentation on RAG stating things like "the data to be referenced is converted into LLM embeddings", and by the fact that RAG retrieval relies on vector databases specialized for embedding-based operations. Many online resources also presented RAG as a complete, singular solution with multiple components. So I jumped to the conclusion that these are the same embeddings. But that would mean the RAG solution would be tailored to the LLM being used, because other LLMs (like Llama3 or Mistral) use different embeddings.

Instead, what RAG does is take the same prompt, convert it into tokens and embeddings (using its own tokenizer and embedding model), and then use those to search the data that was added to the RAG database. This data (the recent insights or other documents you want your LLM to know about) is also tokenized and converted into embeddings, but it is not those embeddings that are brought back to the main LLM: it is the plain-text result (or other media types that your LLM understands, such as images).
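A minimal sketch of the retrieval step, assuming the knowledge base already stores `(text, vector)` pairs produced by the RAG side's own embedding model. The vectors and chunk texts are invented for this example. Note what comes back: plain text, not embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vector, knowledge_base, top_k=3):
    """Return the plain text of the top_k chunks most similar to the query.

    knowledge_base holds (text, vector) pairs; the vectors must come from the
    same embedding model that produced query_vector (not from the LLM).
    """
    ranked = sorted(knowledge_base, key=lambda item: cosine(query_vector, item[1]), reverse=True)
    return [text for text, _vector in ranked[:top_k]]


# Invented 3-dimensional vectors standing in for a real embedding model's output.
knowledge_base = [
    ("Chunk about the ingredients of Corona beer", [0.1, 0.9, 0.1]),
    ("Chunk about COVID-19 symptoms", [0.9, 0.1, 0.1]),
    ("Chunk about bicycle maintenance", [0.1, 0.1, 0.9]),
]
query_vector = [0.2, 0.8, 0.1]  # pretend embedding of "What are the ingredients for Corona?"
print(retrieve(query_vector, knowledge_base, top_k=2))
```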

Why does RAG then use embeddings? Wouldn't a simple search engine be sufficient? Well, RAG's primary advantage is its ability to locate relevant information more effectively through embeddings. Thanks to the embedding representation, RAG can find information related to the user query without relying on keyword matches. You could in fact replace the RAG engine with a simple search, and many LLM-powered applications support this. For instance, Koboldcpp, which I use to run LLMs locally, supports a simple DuckDuckGo-based web search as well.

The use of embeddings for search operations (again, completely independent of the LLM) allows for contextual understanding. When a user prompts for "What are the ingredients for Corona", a simple keyword-based search operation might incorrectly result in findings of COVID-19, whereas in this case the query is about the Corona beer.

These improved search operations are often called "semantic search", as they have a better understanding of the semantics and meanings of text (through the embeddings), resulting in more contextually relevant insights.

When is it "RAG" and when semantic search

Retrieval Augmented Generation is the process of converting the user query, performing a semantic search against the knowledge base, and appending the best results (e.g. top-3 hits in the knowledge base) to the user input text. This completed input text thus contains both the user query, as well as pieces of insights obtained from the semantic search. The LLM uses this additional information for generating better outcomes. This entire pipeline (retrieving context, augmenting the prompt, and then generating output) is what defines "RAG".
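The augmentation step itself is plain string assembly. The template wording below is hypothetical (each RAG tool uses its own), but it shows that the retrieved snippets reach the LLM as ordinary text appended to the user's input, which the LLM then tokenizes and embeds like any other input.

```python
def build_augmented_prompt(user_query, retrieved_snippets):
    """Append retrieved text snippets to the user's question (hypothetical template)."""
    context = "\n".join(f"- {snippet}" for snippet in retrieved_snippets)
    return (
        f"{user_query}\n\n"
        "Relevant context from the knowledge base:\n"
        f"{context}"
    )


print(build_augmented_prompt(
    "What are the ingredients for Corona?",
    ["Snippet from the knowledge base #1", "Snippet from the knowledge base #2"],
))
```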

Technology-wise, I personally see RAG as very similar to a regular search: replace the semantic search with a search engine (which could also use semantic search under the hood) and the outcome is the same. The main difference is that RAG aims to return accurate snippets tailored to provide context, whereas search-engine-based retrieval returns more generic snippets of data.

In the market, RAG also focuses on the management of the semantic search (and vector database), optimizing the data that is added to the knowledge base to be LLM-friendly (shorter pieces of accurate data, rather than fully-indexed complete pages which could easily overload the maximum size that an LLM can handle). It prioritizes efficient data management and insights lifecycle control.
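Preparing those "shorter pieces" usually means splitting documents into chunks before embedding them. The sketch below splits on words with a small overlap between adjacent chunks; word-based splitting and the default sizes are simplifying assumptions, since many tools count tokens instead.

```python
def chunk_text(text, max_words=50, overlap=10):
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words, so text cut at a boundary
    still has some context in the adjacent chunk.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


document = " ".join(str(i) for i in range(25))
for chunk in chunk_text(document, max_words=10, overlap=2):
    print(chunk)
```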

For LLMs, it also provides a bit more nuance. A web search would be presented to the LLM as "The following information can be useful to answer the question", whereas RAG results would be presented as actual insights/context. LLMs might be trained to deal differently with that distinction.

Keeping the semantic search independent of the LLM of course makes a lot of sense. It allows companies or organizations to build up a knowledge base and maintain this knowledge independently of the LLMs. Multiple different LLMs can then use RAG to obtain the latest information from this knowledge base. Or you can use the engine for semantic searches alone: you do not need LLMs to get better searches. Many popular web search engines use semantic search under the hood (i.e. when they index pages, they also generate embeddings for them and store those in their own vector databases to improve search results).

When new embedding algorithms emerge that you want to use, you must regenerate the embeddings for the entire knowledge base. But that will most likely happen much, much less frequently than switching to new LLM models (given how rapidly those evolve).

Conclusion

RAG is a feature of the software that runs the LLM, allowing it to retrieve contextual information from a curated knowledge base. The embeddings RAG uses serve its semantic search; they are not the same embeddings as those used by the LLM. The contextual information is added to the user prompt as text, and only then 'converted' into the embeddings used by the LLM itself.

Feedback? Comments? Don't hesitate to get in touch on Mastodon.

Images are created in Inkscape, using icons from Streamline (GitHub), released under the CC BY 4.0 license, indexed at OpenSVG.

February 26, 2026

Since the start of this year, I've been trying to help out as a newbie coach at CoderDojo Genk. I have to be honest: I don't really know much about Scratch yet, and even less about micro:bit, but I assume that will come. Anyway, last weekend the mother of two participants told me that programming seems so difficult to her. In the chaos of the moment, I barely responded to that…

February 24, 2026

Last week, we went on a ski trip in Saint-Gervais, France. For the first time, Axl wasn't with us. He's off at university now, and his school breaks no longer line up with Stan's. So Stan and I had a lot of father-son time on the slopes.

Our friends Alexis and Héloïse were also on the trip with us, and Alexis captured this short video one of the days. I love it. Thank you, Alexis!

February 23, 2026

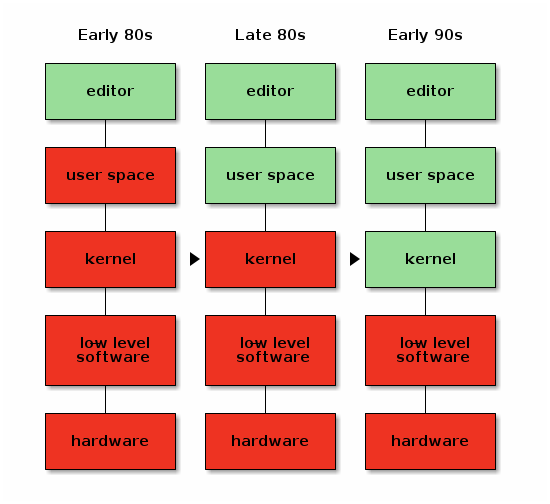

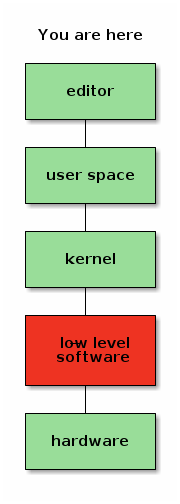

When the Free Software movement started in the 1980s, most of the world had just made a transition from free university-written software to non-free, proprietary, company-written software. Because of that, the initial ethical standpoint of the Free Software Foundation was that it's fine to run a non-free operating system, as long as all the software you run on that operating system is free.

Initially this was just the editor.

But as time went on, and the FSF managed to write more and more parts of the software stack, their ethical stance moved with the times. This was a very reasonable, pragmatic stance: if you don't accept using a non-free operating system and there isn't a free operating system yet, then obviously you can't write that free operating system, and the world won't move towards a point where free operating systems exist.

In the early 1990s, when Linus initiated the Linux kernel, the situation reached the point where the original dream of a fully free software stack was realized.

Or so it would appear.

Because, in fact, this was not the case. Computers are physical objects, composed of bits of technology that we refer to as "hardware", but in order for these bits of technology to communicate with other bits of technology in the same computer system, they need to interface with each other, usually using some form of bus protocol. These bus protocols can get very complicated, which means that a bit of software is required in order to make all the bits communicate with each other properly. Generally, this software is referred to as "firmware", but don't let that name deceive you; it's really just a bit of low-level software that is very specific to one piece of hardware. Sometimes it's written in an imperative high-level language; sometimes it's just a set of very simple initialization vectors. But whatever the case might be, it's always a bit of software.

And although we largely had a free system, this bit of low-level software was not yet free.

Initially, storage was expensive, so computers couldn't store as much data as today, and so most of this software was stored in ROM chips on the exact bits of hardware they were meant for. Due to this fact, it was easy to deceive yourself that the firmware wasn't there, because you never directly interacted with it. We knew it was there; in fact, for some larger pieces of this type of software it was possible, even in those days, to install updates. But that was rarely if ever done at the time, and it was easily forgotten.

And so, when a fully free operating system became available, the free software movement slapped itself on the back and declared victory. It decided that the work of creating a free software environment was finished, that all that remained was keeping it up to date, and that we must reject any further non-free encroachments on our fully free software stack. In doing so, the free software movement was deceiving itself.

Because a computing environment can never be fully free if the low-level pieces of software that form the foundations of that computing environment are not free. It would have been one thing if the Free Software Foundation had declared it ethical to use non-free low-level software on a computing environment if free alternatives were not available. But unfortunately, they did not.

In fact, something very strange happened.

In order for some free software hacker to be able to write a free replacement for some piece of non-free software, they obviously need to be able to actually install that theoretical free replacement. This isn't just a random thought; in fact it has happened.

Now, it's possible to install software on a piece of rewritable storage such as flash memory inside the hardware and boot the hardware from that, but if there is a bug in your software -- not at all unlikely if you're trying to write software for a piece of hardware that you don't have documentation for -- then it's not unfathomable that the replacement piece of software will not work, thereby reducing your expensive piece of technology to something about as useful as a paperweight.

Here's the good part.

In the late 1990s and early 2000s, the bits of technology that made up computers became so complicated, and the storage and memory available to computers so much larger and cheaper, that it became economically more feasible to create a small, tiny piece of software stored in a ROM chip on the hardware, with just enough knowledge of the bus protocol to download the rest from the main computer.

This is awesome for free software. If you now write a replacement for the non-free software that comes with the hardware, and you make a mistake, no wobbles! You just remove power from the system, let the DRAM chips on the hardware component fully drain, return power, and try again. You might still end up with a brick of useless silicon if some of the things you send to your hardware make it do things it was not designed to do and you burn through some critical bits of metal or plastic. But the chance of this happening is significantly lower than the chance of writing something that breaks the boot process of the hardware, leaving you unable to fix it because the flash has been overwritten. There is anecdotal evidence that there are free software hackers out there who do exactly this. So, yay, right? You'd think the Free Software Foundation would jump at the possibility to get more free software. After all, a large part of why we even have a Free Software Foundation in the first place was some piece of hardware that was misbehaving, so you would think that the foundation's founders would understand the need for hardware to be controlled by software that is free.

The strange thing, what has always been strange to me, is that this is not what happened.

The Free Software Foundation instead decided that non-free software on ROM or flash chips is fine, but non-free software -- the very same non-free software, mind -- that touches the general storage device that you as a user use, is not. Never mind the fact that the non-free software is always there, whether it sits on your storage device or not.

Misguidedness aside, if some people decide they would rather not update the non-free software in their hardware and use the hardware with the old and potentially buggy version of the non-free software that it came with, then of course that's their business.

Unfortunately, it didn't quite stop there. If it had, I wouldn't have written this blog post.

You see, even though the Free Software Foundation was about Software, they decided that they needed to create a hardware certification program. And this hardware certification program ended up embedding the strange concept that if something is stored in ROM it's fine, but if something is stored on a hard drive it's not. Same hardware, same software, but different storage. By that logic, Windows respects your freedom as long as the software is written to ROM. This way, the Free Software Foundation could come to a standstill and pretend they were still living in the 90s.

An unfortunate result of the "Respects Your Freedom" (RYF) program is that companies who otherwise would have been inclined to create hardware that was truly free, top to bottom, are now more incentivised by the RYF program to create hardware in which the non-free low-level software can't be replaced.

Meanwhile, the rest of the world did not pretend to still be living in the nineties, and free hardware communities now exist. Because of how the FSF has marketed themselves out of the world, these communities call themselves "Open Hardware" communities, rather than "Free Hardware" ones, but the principle is the same: the designs are there, and if you have the skill you can modify them, but you don't have to.

In the meantime, the open hardware community has evolved to a point where even CPUs are designed in the open, most prominently around the open RISC-V instruction set, and you can design your own version of them.

But not all hardware can be implemented as RISC-V, so if you want a full system built around RISC-V you may still need components that were originally built for other architectures but that work with RISC-V, such as a network card or a GPU. And because the FSF has done everything in their power to disincentivise people who would otherwise be well situated to build free versions of the low-level software required to support your hardware, you may now find yourself in the weird position where we seem to have somehow skipped a step.

My own suspicion is that the universe is not only queerer than we suppose, but queerer than we can suppose.

-- J.B.S. Haldane

(comments for this post will not pass moderation. Use your own blog!)

Four years ago, I published my first Web3 webpage using IPFS and ENS. I uploaded a simple "Hello World" HTML file, pointed dries.eth at it, and left it there. Two years later, I found it still running.

I haven't touched it since. Today, on the experiment's four-year anniversary, I checked again. It's still up at dries.eth.limo. Four years of total neglect, and the page loads just fine.

When I checked two years ago, every service I used was still operational. I called that "really encouraging". That optimism aged poorly.

Today, half the services have shut down, restricted access, or pivoted entirely:

- Fleek, a platform I used to pin my content, shut down its hosting service on January 31, 2026. They pivoted to AI.
- Infura was acquired by MetaMask and closed its IPFS service to new users.
- Web3.storage rebranded to Storacha and dropped IPFS pinning altogether.

Of the original services, Pinata is still focused on IPFS. I logged in and my "Hello World" HTML file is still there. And eth.limo, the ENS gateway that lets anyone access .eth sites in a normal browser, still works.

Technically robust, commercially fragile

IPFS content exists only as long as someone chooses to host it. In 2022, my HTML file was pinned on Fleek, Pinata, Infura, and a friend's node. Today, it comes down to Pinata's free tier. My web3 page is hanging by a thread.

That sounds fragile, and in one sense it is. But the protocol is working as designed. IPFS doesn't promise permanence. It promises that content is addressable, verifiable, and can be kept alive by anyone who cares enough to pin it.
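To make "addressable and verifiable" concrete, here is a simplified Python sketch. It assumes a plain SHA-256 hex digest as the address. Real IPFS CIDs wrap the hash in multihash and multicodec prefixes, encode it (for example in base32), and split larger files into a DAG first, so these digests will not match what `ipfs add` prints. The `fetch` helper and the dict-per-node model are hypothetical illustrations of "kept alive by anyone who pins it".

```python
import hashlib
from typing import Optional

def content_address(data: bytes) -> str:
    """Simplified content address: the SHA-256 hex digest of the bytes.
    (Real IPFS CIDs add multihash/multicodec prefixes and a base encoding.)"""
    return hashlib.sha256(data).hexdigest()

def verify(address: str, data: bytes) -> bool:
    """Anyone who receives the bytes can recompute the hash and compare."""
    return content_address(data) == address

def fetch(address: str, nodes: list) -> Optional[bytes]:
    """Return verified content from the first node that still pins it, else None.
    Each node is modeled as a dict mapping addresses to pinned bytes (hypothetical)."""
    for node in nodes:
        data = node.get(address)
        if data is not None and verify(address, data):
            return data
    return None

if __name__ == "__main__":
    page = b"<h1>Hello World</h1>"
    addr = content_address(page)
    node_a = {addr: page}  # one remaining node that still pins the page
    node_b = {}            # a node that no longer pins anything
    print(fetch(addr, [node_b, node_a]) == page)  # True while one node pins it
    print(fetch(addr, [node_b]))                  # None once nobody pins it
```

Changing a single byte changes the address. That is also why updating the page means writing a new hash to dries.eth.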

The durability of content on IPFS scales with how much people care about it. No one really cares about my "Hello World" page, so it's not being pinned. If this were dissident journalism or censored speech, people would likely pin it.

If you run an IPFS node and want to help keep my page alive, feel free to pin it: ipfs pin add bafybeibbkhmln7o4ud6an4qk6bukcpri7nhiwv6pz6ygslgtsrey2c3o3q.

Of course, I could make it more robust myself by pinning it on additional services or running my own node. Instead, I relied on third parties. With no node of my own, I'm entirely dependent on pinning services and other users to keep it alive.

The real story isn't fragility. It's economics. There may simply not be enough demand for decentralized storage to support a healthy market of providers. Companies keep entering, failing to find a business model, and pivoting away.

Traditional hosting is cheap, reliable, and familiar. The people who need censorship resistance are a narrow group, and everyone else has little reason to switch, myself included. And to be fair, replacing everyday web hosting was probably never IPFS's goal.

I still love IPFS as a protocol and the ideas behind it. But it feels like the ecosystem around it is commercially thin, and getting thinner.

I've written before about the idea of a RAID for web content. Rather than relying on a single system, the goal is redundancy across multiple layers. I'd like to experiment more with IPFS and make it one of those layers.

Browser support got worse, not better

In 2022, mainstream browsers didn't support ENS or IPFS natively, except for Brave. Brave included a built-in IPFS node that could resolve ipfs:// and ipns:// addresses directly.

In August 2024, Brave removed that integration. Fewer than 0.1% of users had ever used it, and the support costs were too high.

Chrome, Firefox, and Safari still don't support ENS or IPFS natively. A Firefox issue for adding IPFS support has been open since 2017, with no plans to implement it. The IPFS community continues pushing for browser standards work, but native protocol support seems like a long way off.

Today, the easiest way to visit a .eth site is still through a gateway like eth.limo. You append .limo to the ENS name and visit it in any browser. It works, but it's a centralized bridge to a decentralized system, which is a little ironic.

Gas fees dropped 99%

Not everything got worse. In my original post, I called out the cost of updating an ENS record as a major barrier. When content changes on IPFS, its hash changes too. To keep dries.eth pointing to the latest version, that new address has to be written to Ethereum, which costs gas.

Updating my content on Ethereum cost me $11.69 in 2022 and $4.08 in 2024. If I wanted to update it today, it would cost roughly one cent.

Average Ethereum gas prices now sit around 0.03 to 0.05 Gwei, compared to 30 to 50 Gwei when I first set this up. That is roughly a thousand times cheaper.
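Here is a quick worked check of the "thousand times" figure. It assumes the same ENS update consumes the same amount of gas in both years and looks only at the fee in ETH. The dollar amounts above also depend on the ETH/USD rate, so they won't scale exactly. Here "gas price" means the effective price paid per unit of gas (base fee plus priority fee).

```latex
\begin{aligned}
\text{fee}_{\text{ETH}} &= \text{gas used} \times \text{gas price}_{\text{Gwei}} \times 10^{-9} \\
\frac{30\ \text{Gwei}}{0.03\ \text{Gwei}} &= \frac{50\ \text{Gwei}}{0.05\ \text{Gwei}} = 1000 \\
1 - \frac{1}{1000} &= 0.999 = 99.9\%
\end{aligned}
```

At constant gas usage, the fee in ETH falls by about 99.9%, so "dropped 99%" is, if anything, an understatement.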

Two things drove this.

First, Ethereum roughly doubled the computation it can process per block over 2025. Validators gradually raised the gas limit, and the Fusaka upgrade in December formally pushed it from 30 million to 60 million.

Second, many applications migrated to Layer 2 networks. These are separate blockchains that batch up transactions and settle them back to Ethereum in bulk. As traffic moved off the main chain, congestion and fees dropped further.

The impact on ENS is dramatic. Just this month, ENS Labs cancelled its planned Layer 2 blockchain called "Namechain" because Ethereum's main network got so inexpensive that the Layer 2 became unnecessary. Nick Johnson, ENS co-founder and lead developer, noted that subsidizing 100% of all ENS transactions at current gas prices would cost about $10,000 per year.

A mixed scorecard

Four years in, the technology is better than ever but the market around it is worse. The IPFS protocol seems sound. ENS records are permanent. Gas fees have nearly vanished. But the commercial ecosystem for decentralized hosting has steadily thinned.

I wrote in 2022 that "IPFS and ENS offer limited value to most website owners, but tremendous value to a very narrow subset". Four years later, that assessment holds true.

AI also absorbed most of the tech industry's attention and capital. Web3 funding dropped sharply. Fleek's pivot from IPFS hosting to AI inference feels symbolic.

Will I keep the experiment going? Of course. But it's probably time to add a few more pins and stop relying on free tiers. The protocols don't need my help. The ecosystem apparently does.

February 21, 2026

Capitalism, AI and education

Excerpt from my journal of January 29, written while reading Chomsky's "Comprendre le pouvoir" (Understanding Power).

Capitalism did not create the education system out of humanism, but because it needed skilled employees to produce growth. With automation having destroyed the culture of the artisan and the worker, which is what the Luddites fought against, a large population found itself reduced to serving the machines.

But advances in automation made this need for low-skilled servants less and less pressing, while creating a need for people who understood the machines in order to maintain and improve them. An education system therefore naturally took shape in capitalist societies, creating an intellectual elite devoted to capitalism.

But this over-education created too many critical citizens who question the very principles of infinite growth, notably because of ecological limits.

Faced with this over-education, wars, threats of every kind and political totalitarianism make it possible to restrict education or, at the very least, to divert attention. Computer education is the main target, because computing has become the backbone of capitalist society. Not understanding what is at stake in computing leaves even the most engaged citizens completely powerless.

The promise of AI is precisely to reduce the need for education while maintaining an equivalent level of production. Every employee can once again become low-skilled, interchangeable labor. AI is intellectual Fordism.

After all, monopolies, permanent surveillance, consumption, the erosion of rights and the AI-ification of work are merely tools to keep citizens under control and on the rails of production capitalism.

And if these citizens imagine they are escaping this control thanks to their "anti-capitalist" Facebook group or their "neighborhood solidarity buying group" on WhatsApp, even better! It's more subtle! In any case, they work for Microsoft all day and settle for a picture of the world generated by Google or Meta.

Their professional lives are enslaved by Microsoft, their private lives by Meta/Facebook, and their interests are controlled by Google. Now that humans are firmly hooked, it is time to gradually reduce their level of education and knowledge in order to improve their servility.

About the author:

I'm Ploum and I've just published Bikepunk, an eco-cycling fable typed entirely on a mechanical typewriter. To support me, buy my books (from your local bookshop if possible)!

Receive my writing in French and English directly by email. Your address will never be shared. You can also use my French-language RSS feed or the full RSS feed.

February 19, 2026

I've been reading Drupal Core commits for more than 15 years. My workflow hasn't changed much over time. I subscribe to the Drupal Core commits RSS feed, and every morning, over coffee, I scan the new entries. For many of them, I click through to the issue on Drupal.org and read the summary and comments.

That workflow served me well for a long time. But when Drupal Starshot expanded my focus beyond Drupal Core to include Drupal CMS, Drupal Canvas, and the Drupal AI initiative, it became much harder to keep track of everything. All of this work happens in the open, but that doesn't make it easy to follow.

So I built a small tool I'm calling Drupal Digests. It watches the Drupal.org issue queues for Drupal Core, Drupal CMS, Drupal Canvas, and the Drupal AI initiative. When something noteworthy gets committed, it feeds the discussion and diff to AI, which writes me a summary: what changed, why it matters, and whether you need to do anything. You can see an example summary to get a feel for the format.

Each issue summary currently lives as its own Markdown file in a GitHub repository. Since I still like my morning coffee and RSS routine, I also generate RSS feeds that you can subscribe to in your favorite reader.
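For readers curious how Markdown summaries can turn into a feed, here is a minimal sketch using only Python's standard library. It is not the Drupal Digests code. The following are all hypothetical assumptions:

- the `# ` title convention,
- the `build_rss` and `markdown_title` names,
- the tuple layout,
- the example.com URLs.

It emits the three channel elements RSS 2.0 requires (title, link, description) plus one item per summary.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime

def markdown_title(markdown: str) -> str:
    """Use the first '# ' heading as the item title (assumed file convention)."""
    for line in markdown.splitlines():
        if line.startswith("# "):
            return line[2:].strip()
    return "Untitled"

def build_rss(channel_title: str, channel_link: str, items) -> str:
    """Build an RSS 2.0 document from (markdown, link, published) tuples."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    # RSS 2.0 requires title, link and description on the channel.
    ET.SubElement(channel, "title").text = channel_title
    ET.SubElement(channel, "link").text = channel_link
    ET.SubElement(channel, "description").text = f"Commit summaries: {channel_title}"
    for markdown, link, published in items:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = markdown_title(markdown)
        ET.SubElement(item, "link").text = link
        ET.SubElement(item, "guid").text = link
        # pubDate uses the RFC 822 date format, e.g. "Thu, 19 Feb 2026 08:00:00 +0000".
        ET.SubElement(item, "pubDate").text = format_datetime(published)
        ET.SubElement(item, "description").text = markdown
    return ET.tostring(rss, encoding="unicode")

if __name__ == "__main__":
    summary = "# Hypothetical example issue\n\nWhat changed and why it matters."
    published = datetime(2026, 2, 19, 8, 0, tzinfo=timezone.utc)
    print(build_rss("Drupal Core", "https://example.com/core",
                    [(summary, "https://example.com/core/1", published)]))
```

Embedding raw Markdown in `<description>` keeps the sketch short; a real feed would probably render it to HTML first.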

I built this to scratch my own itch, but realized it could help with something bigger. Staying informed is one of the hardest parts of contributing to a large Open Source project. These digests can help new contributors ramp up faster, help experienced module maintainers catch API changes, and make collaboration across the project easier.

I'm still tuning the prompts. Right now it costs me less than $2 a day in tokens, so I'm committed to running it for at least a year to see whether it's genuinely useful. If it proves valuable, I could imagine giving it a proper home, with search, filtering, and custom feeds.

For now, subscribe to a feed and tell me what you think.

February 18, 2026

February 16, 2026

Preface to "La déception informatique"

This text is the preface I wrote for Alain Lefebvre's book "La déception informatique" (roughly, "The Disappointment of Computing"). Unfortunately, the paper edition is only available on… gasp… Amazon! But the epub and pdf versions can be downloaded for free!

- La déception informatique ! (www.alain-lefebvre.com)

- La déception informatique-V1.0.epub (drive.google.com)

- La déception informatique-V1.0.pdf (drive.google.com)

- La déception informatique, Kindle edition (www.amazon.fr)

- La déception informatique, paperback edition (www.amazon.fr)

La déception informatique, by Alain Lefebvre

For some years now, whenever I have to buy a household appliance or even a car, I insist that the salesperson find me a solution that works without a permanent connection and doesn't require a smartphone app.

The reaction is always the same: "Oh? You struggle with technology?"

Yes, I struggle. And yet I published my first book on computing in 2005. And yet I have been teaching for 10 years in the computer science department of the École Polytechnique de Louvain.

Alain and I are IT professionals who have each, in our own way, built a career in computing and technology. We can even pride ourselves on a certain recognition among specialists in the field. We have been immersed in it for decades. In short, Alain and I are "geeks", the kind of people who see a computer as an extension of themselves.

So, most of the time, I simply answer the salesperson: "Once you know how fast food is made, you stop eating it…" The discussion ends there.

But deep down, the salesperson is right: Alain and I struggle with modern technology. Not because we don't understand it. On the contrary, because we understand it too well. We know what it was, and what it could have been. And we weep over what it is today.

In all transparency, I have often wondered whether my reaction was simply a consequence of age. A very traditional case of the "it was better before" syndrome that every generation seems to go through. There is certainly some of that.

But not only that…

Alain could be my father. He is 21 years my senior and built most of his career while I was trying to get lines of BASIC to run on my first 386. While I was designing my first websites, Alain was taking his company public just as the Internet bubble was bursting.

We are from different generations, with completely unrelated experiences of computing. And yet we arrive at similar reflections.

Reflections that seem to be shared by readers of my blog of all cultures and all ages (some of them teenagers). Reflections that some of my students sometimes share as well.

We had believed that the ubiquity of computers would let us do whatever we wanted, and program them to obey our slightest desires.

But in our pockets now sit computers that we are forbidden to modify, or that are very hard to modify. Programming is now fenced in and restricted to whatever three or four American multinationals are willing to let us do.

We thought that the computerization of society would free us from administrative paperwork, which would become rational and automatable.

Instead, we are constantly struggling to fill in forms that don't accept our answers, we regularly have to update all our electronic devices, and we have to fight against computerized procedures that we know, from experience, were explicitly built to discourage us.

We believed that giving everyone the ability to express themselves and exchange on a global network would open a new era of cooperation and of sharing knowledge and culture.

Instead, we have created the perfect infrastructure where the bellowing of fascists is surrounded by the most shameless advertising.

Technically, we were aware that using a computer required learning. We were certain that this learning would become less and less difficult.

But, although colors have become more vivid and photos sharper, interfaces have grown excessively complex, forcing the vast majority of users to stay within the two or three familiar, well-trodden functions. What was within reach of an amateur 20 years ago now requires an army of professionals.

In the name of financial interests, the sharing of culture was very quickly criminalized, while insults and hate speech were amplified to serve as a vehicle for omnipresent advertising.

We had hoped that the democratization of computing would gradually turn every person into an interested, curious, awakened citizen.

Instead, we see crowds queuing to spend a month's salary on a small shiny screen explicitly designed to be addictive and mind-numbing, encouraging only one thing: to consume ever more.

"With computers, everyone will have access to knowledge and education!" we cried.

Today, most schools have a computing curriculum that mostly boils down to stopping thinking and, instead, producing slides in Microsoft PowerPoint.

When you have had such dreams, when you know that these dreams are technologically possible and, above all, that we had them within our grasp, there is good reason to be disappointed.

It's not that computing failed to fulfill our dreams! No, it's worse: it produced exactly the opposite. It seems to have amplified the problems we hoped to solve while creating new ones, such as the permanent surveillance to which we are now subjected. The technological atrocities I exaggerated in "Printeurs", my dystopian cyberpunk novel, now seem mundane, or even tamer than reality.

Disappointed we are, Alain and I. And the title of his book sums it up admirably: la déception informatique, the disappointment of computing.

A book that is perhaps also a kind of mea culpa. We helped give birth to this Frankenstein's monster that is modern computing. It is high time to sound the alarm, and to wake up those among us who are still burying their heads in the sand…

February 14, 2026


February 12, 2026

Being the web performance zealot I am, I strive to have as little JavaScript on my sites as possible. After all, JavaScript has to be downloaded and executed, so extra JS always has a performance cost, even in the rare best case where it doesn't affect Core Web Vitals (which are only a snapshot of the bigger performance and sustainability picture).

February 11, 2026

For the past months, the AI Initiative Leadership Team has been working with our contributing partners to define what the Drupal AI initiative should focus on in 2026. That plan is now ready, and I want to share it with the community.

This roadmap builds directly on the strategy we outlined in Accelerating AI Innovation in Drupal. That post described the direction. This plan turns it into concrete priorities and execution for 2026.

The full plan is available as a PDF, but let me explain the thinking behind it.

Producing consistently high-quality content and pages is really hard. Excellent content requires a subject matter expert who actually knows the topic, a copywriter who can translate expertise into clear language, someone who understands your audience and brand, someone who knows how to structure pages with your component library, good media assets, and an SEO/AEO specialist so people actually discover what you made.

Most organizations are missing at least some of these skillsets, and even when all the people exist, coordinating them is where everything breaks down. We believe AI can fill these gaps, not by replacing these roles but by making their expertise available to every content creator on the team.

For large organizations, this means stronger brand consistency, better accessibility, and improved compliance across thousands of pages. For smaller ones, it means access to skills that were previously out of reach: professional copywriting, SEO, and brand-consistent design without needing a specialist for each.

Used carelessly, AI just makes these problems worse by producing fast, generic content that sounds like everything else on the internet. But used well, with real structure and governance behind it, AI can help organizations raise the bar on quality rather than just volume.

Drupal has always been built around the realities of serious content work: structured content, workflows, permissions, revisions, moderation, and more. These capabilities are what make quality possible at scale. They're also exactly the foundation AI needs to actually work well.

Rather than bolting on a chatbot or a generic text generator, we're embedding AI into the content and page creation process itself, guided by the structure, governance, and brand rules that already live in Drupal.

For website owners, the value is faster site building, faster content delivery, smarter user journeys, higher conversions, and consistent brand quality at scale. For digital agencies, it means delivering higher-quality websites in less time. And for IT teams, it means less risk and less overhead: automated compliance, auditable changes, and fewer ad hoc requests to fix what someone published.

We think the real opportunity goes further than just adding AI to what we already have. It's also about connecting how content gets created, how it performs, and how it gets governed into one loop, so that what you learn from your content actually shapes what you build next.

The things that have always made Drupal good at content are the same things that make AI trustworthy. That is not a coincidence, and it's why we believe Drupal is the right place to build this.

What we're building in 2026

The 2026 plan identifies eight capabilities we'll focus on. Each is described in detail in the full plan, but here is a quick overview:

- Page generation - Describe what you need and get a usable page, built from your actual design system components

- Context management - A central place to define brand voice, style guides, audience profiles, and governance rules that AI can use

- Background agents - AI that works without being prompted, responding to triggers and schedules while respecting editorial workflows

- Design system integration - AI that builds with your components and can propose new ones when needed

- Content creation and discovery - Smarter search, AI-powered optimization, and content drafting assistance

- Advanced governance - Batch approvals, branch-based versioning, and comprehensive audit trails for AI changes

- Intelligent website improvements - AI that learns from performance data, proposes concrete changes, and gets smarter over time through editorial review

- Multi-channel campaigns - Create content for websites, social, email, and automation platforms from a single campaign goal

These eight capabilities are where the official AI Initiative is focusing its energy, but they're not the whole picture for AI in Drupal. There is a lot more we want to build that didn't make this initial list, and we expect to revisit the plan in six months to a year.

We also want to be clear: community contributions outside this scope are welcome and important. Work on migrations, chatbots, and other AI capabilities continues in the broader Drupal community. If you're building something that isn't in our 2026 plan, keep going.

How we're making this happen

Over the past year, we've brought together organizations willing to contribute people and funding to the AI initiative. Today, 28 organizations support the initiative, collectively pledging more than 23 full-time-equivalent contributors. That capacity is spread across more than 50 individual contributors in different time zones and disciplines.

Coordinating 50+ people across organizations takes real structure, so we've hired two dedicated teams from among our partners:

- QED42 is focused on innovation, pushing forward on what is next.

- 1xINTERNET is focused on productization, taking what we've built and making it stable, intuitive, and easy to install.

Both teams are creating backlogs, managing issues, and giving all our contributors clear direction. You can read more about how we are going from strategy to execution.

This is a new model for Drupal. We're testing whether open source can move faster when you pool resources and coordinate in a new way.

Get involved

If you're a contributing partner, we're asking you to align your contributions with this plan. The prioritized backlogs are in place, so pick up something that fits and let's build.

If you're not a partner but want to contribute, jump in. The prioritized backlogs are open to everyone.

And if you want to join the initiative as an official partner, we'd absolutely welcome that.

This plan wasn't built in a room by itself. It's the result of collaboration across 28 sponsoring organizations who bring expertise in UX, core development, QA, marketing, and more. Thank you.

We're building something new for Drupal, in a new way, and I'm excited to see where it goes.

A few weeks ago, I was invited to the Seahawk Media podcast to chat about Oh Dear, our origins, and where we’re headed as a company in the age of AI and easily copied SaaS ideas.

Do not apologize for replying late to my email

You don’t need to apologize for taking hours, days, or years to reply to one of my emails.

If we are not close collaborators, and if I didn’t explicitly tell you I was waiting for your answer within a specific timeframe, then please stop apologizing for replying late!

This is a trend I’m witnessing, probably caused by our addiction to instant messaging. Most of the emails I receive these days contain some sort of apology. I received an apology from someone who took five hours to reply to a cold and unimportant email. I have even received apologies in replies to a reply I had sent only a couple of days earlier.

Apologizing for taking time to reply to my email is awkward and makes me uncomfortable.

It also puts a lot of pressure on me: what if I take more time than you to reply? Isn’t the whole point of asynchronous communication to be… asynchronous? Each on its own rhythm?

I was not waiting for your email in the first place.

As soon as my email was sent, I probably forgot about it. I may have thought a lot before writing it. I may have drafted it multiple times. Or not. But as soon as it was in my outbox, it was also out of my mind.

That’s the very point of asynchronous communication. That’s why I use email. I’m not making any assumptions about your availability.

Most of the emails I send are replies to emails I received. So, no, I was not waiting for a reply to my reply.

My email might also be an idea I wanted to share with you, a suggestion, a random thought, a way to connect. In all cases, I’m not sitting there, waiting impatiently for your answer.

Even if my email was asking for help or proposing to collaborate with you, I’ve been trying to move forward anyway. Your reply, whenever it comes, will only be a bonus. But unless we are in close collaboration and I explicitly said so in the email, I’m not waiting for you!

I don’t want to know all the details of your life.

Yes, you took several days to reply to my email. That’s OK. I don’t need to know that it’s because your mother was dying of cancer or that you were expelled from your house. I’m not making those up! I really receive that kind of apology from people who took several days to reply to emails that look trivial in comparison.

Life happens. If you have more important things to do than replying to my email, then, for god’s sake, don’t reply to it. I get it! I’m human too. While I sometimes reply to every email I receive for several days in a row, I may also quickly archive them for weeks because I don’t have the mental space.

If you want to reply but don’t have time, put the burden on me

If I’m asking you something and you would really like to reply but can’t find the time, it is OK to simply send one line like:

Hey Ploum, I don’t have the time and mental space right now. Could you contact me again in 6 months to discuss this idea?

Then archive or delete my email. That’s fine. If I really want your input, I will manage to remind you in 6 months. You don’t need to justify. You don’t need to explain. Being short saves time for both of us.

You don’t need to reply at all!

Unless explicitly stated otherwise, don’t feel any pressure to reply to one of my emails. Feel free to read and discard the email. Feel free to think about it. Feel free to reply to it, even years later, if it makes sense for you. But, most importantly, feel free not to care!

We all receive too many messages in a day. We all have to make choices. We cannot follow all the paths that look interesting because we are all constrained by having, at most, a couple billion seconds left to live.

Consider whether replying adds any value to the discussion. Is a trivial answer really needed? Is there really something to add? Can’t we both save time by you not replying?

If my email is already a reply to yours, is there something you really want to add? At some point, it is better to stop the conversation. And, as I said, it is not rude: I’m not waiting for your reply!

Don’t tell me you will reply later!

Some people specialize in answering email by explaining why they have no time and that they will reply later.

If I’m not explicitly waiting for you, then that’s the very definition of a useless email. That also adds a lot of cognitive load on you: you promised to answer! The fact that you wrote it makes your brain believe that replying to my email is a daunting task. How will you settle for a quick reply after that? What am I supposed to do with such a non-reply email?

In case an acknowledgement is needed, a simple reply with "thanks" or "received" is enough to inform me that you’ve got the message. Or "ack" if you are a geek.

If you do reply, remind me of the context

If you choose to reply, consider that I have switched to completely different tasks and may have forgotten the context of my own message. When online, my attention span is measured in seconds, so it doesn’t matter if you take 30 minutes or 30 days to answer my email: I guarantee you that I forgot about it.

Consequently, please keep the original text of the whole discussion!

Use bottom-posting style to reply to each question or remark in the body of the original mail itself. Don’t hesitate to cut out parts of the original email that are not needed anymore. Feel free to ignore large parts of the email. It is fine to give a one-line answer to a very long question.

I’m trying to make my emails structured. If there are questions I want you to answer, each question will be on its own line and will end with a question mark. If you do not see such lines, then there’s probably no question to answer.

If you top-post, please briefly remind me of the context we are in, for example:

Dear Ploum,

I contacted you 6 months ago about my "fooing the bar" project after we met at FOSDEM. You replied to my email with a suggestion of "baring the foo." You also asked a lot of questions. I will answer those below in your own email:

In short, that’s basic mailing-list etiquette.

No, seriously, I don’t expect you to reply!

If there’s one thing to remember, it’s that I don’t expect you to reply. I’m not waiting for it. I have a life, a family, and plenty of projects. The chance I’m thinking about the email I sent you is close to zero. No, it is literally zero.

So don’t feel pressured to reply. Should you really reply in the first place? In case of doubt, drop the email. Life will continue.

If you do reply, I will be honored, whatever time it took for you to send it.

In any case, whatever you choose, do not apologize for replying late!

About the author

I’m Ploum, a writer and an engineer. I like to explore how technology impacts society. You can subscribe by email or by RSS. I value privacy and never share your address.

I write science-fiction novels in French. For Bikepunk, my new post-apocalyptic-cyclist book, my publisher is looking for contacts in other countries to distribute it in languages other than French. If you can help, contact me!

February 10, 2026

"Buy European" is becoming Europe's rallying cry for digital sovereignty. The logic is intuitive: if you want independence from American technology, buy from European companies instead.

However, I think "Buy European" gets one thing right and one thing wrong. It's right that Europe benefits from a stronger technology industry. But buying European does not guarantee sovereignty.

Sovereignty is not about where a company is headquartered or where software was originally written. It is about whether you retain "freedom of action" over the technology you depend on, even if the vendor changes strategy, gets acquired, or disappears.

The right question to ask about any technology: if conditions change, do you retain the freedom to keep using, modifying, and maintaining this software?

When evaluating sovereignty, it is not enough to ask how much control you have today. You also need to ask how much of that control is structurally protected, built into the legal and community foundations, so it can't be taken away tomorrow.

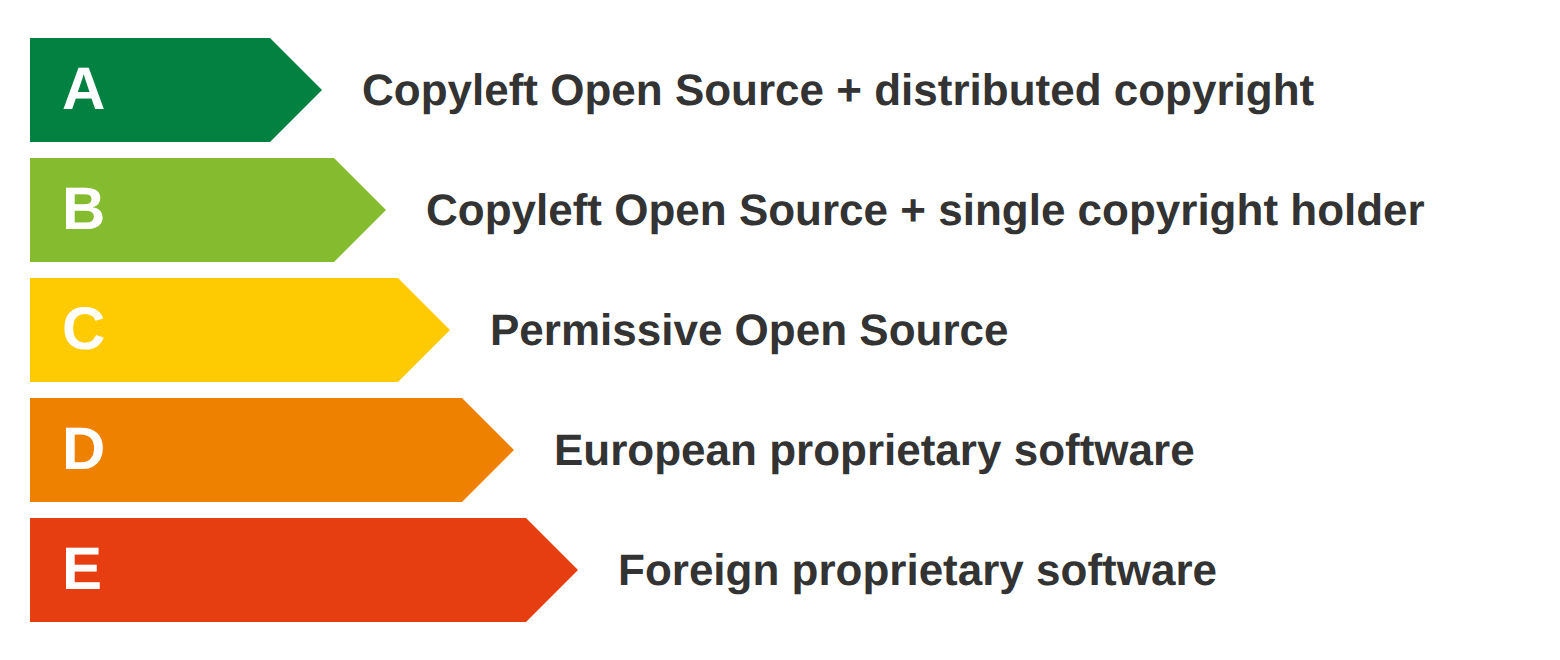

The proposed scale measures structural protection. It is not a ranking of openness, nor does it capture every dimension of sovereignty. The scale also does not imply that one license is always better than another.

The most important distinction in the scale is between Open Source and proprietary. All three green grades give you freedom of action: the right to use, modify, and maintain the software independently, forever. The differences between A, B, and C reflect how structurally protected that freedom is against acquisition, relicensing, or ecosystem shift. They also determine how much you risk being left behind if the project's direction changes.

I used five levels, modeled on Europe's familiar A-through-E labels for energy efficiency and food nutrition, from structurally sovereign to fully dependent. Frameworks like the European Commission's Cloud Sovereignty Framework do not yet make these structural distinctions. This scale aims to improve on what exists and is used today, and I expect it to improve further through scrutiny and feedback.

| Grade | Type | Can someone take it away? | Examples |
|---|---|---|---|
| A | Copyleft + no relicensing risk | No. The code cannot be relicensed, and all derivatives must be Open Source forever. | Linux, Drupal, WordPress |
| B | Copyleft + relicensing risk | No. All derivatives must be Open Source. But future versions can be relicensed if copyright is concentrated. | MySQL → MariaDB |
| C | Permissive Open Source | No. But the license allows proprietary derivatives that can shift value away from the open project. | Redis (relicensed), Valkey (fork) |